
Processing Engine


The processing engine processes data, usually retrieved from storage devices, according to predefined logic in order to produce a result. Any data processing requested by the Big Data solution is fulfilled by the processing engine.

A Big Data processing engine utilizes a distributed parallel programming framework that enables it to process very large amounts of data distributed across multiple nodes. It requests the processing resources it requires from the resource manager.
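As a rough illustration of the distributed parallel model described above, the following sketch splits a dataset into partitions, processes each partition independently, and then merges the partial results. It is only a single-machine approximation: worker threads stand in for nodes, and the names `count_words`, `merge_counts`, and `run_job` are hypothetical rather than part of any particular framework.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def count_words(partition):
    """Map step: count words within a single partition of the dataset."""
    counts = Counter()
    for line in partition:
        counts.update(line.lower().split())
    return counts


def merge_counts(left, right):
    """Reduce step: combine two partial results into one."""
    return left + right


def run_job(partitions, workers=2):
    """Process every partition in parallel, then merge the partial results.

    Assumption: threads simulate separate nodes; a real engine would ship
    the logic to the machines that hold each partition.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(count_words, partitions))
    return reduce(merge_counts, partials, Counter())


# Two partitions, as if stored on two different nodes.
partitions = [
    ["big data big engine"],
    ["data engine data"],
]
totals = run_job(partitions)
print(dict(totals))
```

Because each partition is processed without reference to the others, adding nodes (here, workers) lets the same logic scale to larger datasets.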

Processing engines generally fall into two categories:

- A batch processing engine that provides support for batch data processing, where processing tasks can take anywhere from minutes to hours to complete. This type of processing engine is considered to have high latency.

- A realtime processing engine that provides support for realtime data processing with sub-second response times. This type of processing engine is considered to have low latency.
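The difference between the two categories can be sketched with a toy computation. In the batch style, the complete dataset is available before processing begins and one result is produced at the end. In the realtime style, the result is updated as each event arrives. The function and class names below are hypothetical and do not correspond to any specific engine.

```python
def batch_average(readings):
    """Batch style: the full dataset is available before processing starts."""
    return sum(readings) / len(readings)


class RunningAverage:
    """Realtime style: the result is updated as each event arrives."""

    def __init__(self):
        self.count = 0
        self.total = 0.0

    def on_event(self, value):
        """Fold one new event into the state and return the current average."""
        self.count += 1
        self.total += value
        return self.total / self.count


readings = [10.0, 20.0, 30.0]

# Batch: one result after the whole dataset has been read.
batch_result = batch_average(readings)

# Realtime: an up-to-date result after every single event.
stream = RunningAverage()
stream_results = [stream.on_event(value) for value in readings]

print(batch_result)    # 20.0
print(stream_results)  # [10.0, 15.0, 20.0]
```

The realtime variant keeps only a small amount of state per event, which is one reason this style of processing can respond with low latency.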

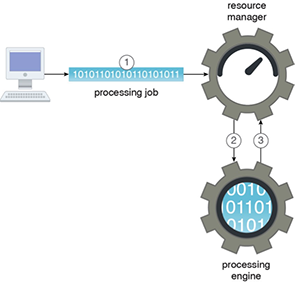

The Big Data solution’s processing requirements dictate the type of processing engine that is used. Figure 1 provides an example where a processing job is forwarded to a processing engine via the resource manager.

Figure 1 – A processing job is submitted to the resource manager (1). The resource manager then allocates an initial set of resources and forwards the job to the processing engine (2), which then requests further resources from the resource manager (3).
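The three steps in Figure 1 can be approximated with the following sketch. It is a simplified model under stated assumptions: resources are represented as a counter of free "slots", and the `ResourceManager` and `ProcessingEngine` classes, their methods, and the job dictionary are all hypothetical and do not reflect the API of any real resource manager.

```python
class ProcessingEngine:
    """Hypothetical engine that asks for more resources if the job needs them."""

    def run(self, job, slots, manager):
        needed = job["tasks"] - slots
        if needed > 0:
            # Step 3: the engine requests further resources.
            extra = manager.allocate(needed)
            manager.log.append(f"3: engine granted {extra} more slot(s)")
            slots += extra
        return {"job": job["name"], "slots_used": slots}


class ResourceManager:
    """Hypothetical manager that tracks free slots and forwards jobs."""

    def __init__(self, total_slots, engine):
        self.free_slots = total_slots
        self.engine = engine
        self.log = []

    def allocate(self, requested):
        """Grant as many of the requested slots as are currently free."""
        granted = min(requested, self.free_slots)
        self.free_slots -= granted
        return granted

    def submit(self, job, initial_slots=1):
        # Step 1: the job is submitted to the resource manager.
        self.log.append("1: job submitted")
        # Step 2: allocate an initial set of resources and forward the job.
        granted = self.allocate(initial_slots)
        self.log.append(f"2: forwarded with {granted} slot(s)")
        return self.engine.run(job, granted, self)


manager = ResourceManager(total_slots=4, engine=ProcessingEngine())
result = manager.submit({"name": "daily-report", "tasks": 3})
print(result)
print(manager.log)
```

In this model the manager never grants more than it has free, so an engine may end up running with fewer slots than it asked for.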

Related Patterns:

- Automated Dataset Execution

- Automated Processing Metadata Insertion

- Canonical Data Format

- Cloud-based Big Data Processing

- Complex Logic Decomposition

- Data Size Reduction

- Dataset Decomposition

- Dataset Denormalization

- Direct Data Access

- File-based Sink

- High Velocity Realtime Processing

- Intermediate Results Storage

- Large-Scale Batch Processing

- Processing Abstraction

- Realtime Access Storage

- Relational Sink

- Relational Source

- Streaming Egress

- Streaming Storage

This mechanism is covered in BDSCP Module 2: Big Data Analysis & Technology Concepts.

For more information regarding the Big Data Science Certified Professional (BDSCP) curriculum, visit www.arcitura.com/bdscp.