
Processing Abstraction (Buhler, Erl, Khattak)

How can different distributed processing frameworks be used to process large amounts of data without having to learn the programmatic intricacies of each framework?

Problem

Solution

Application

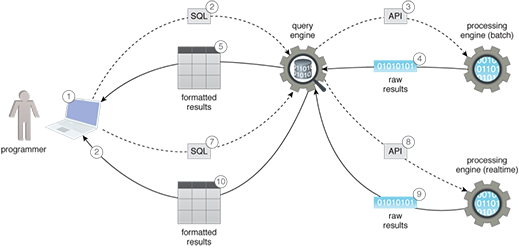

A query engine is used to transform the commands entered by the user into low-level API calls that invoke a particular processing engine. The processing engine then creates a processing job that performs the required processing on the data held in the storage device. Once the results have been computed, the query engine formats them into a grid-like structure and presents them to the user.
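
The query engine's translation step can be illustrated with a minimal Python sketch. It assumes a hypothetical `BatchEngine` whose only low-level API is a map/reduce-style `run_job` call, and a hypothetical `QueryEngine` that understands just one SQL-like form (`SELECT col, COUNT(*) FROM table GROUP BY col`); an in-memory dictionary stands in for the storage device. Real query engines parse far richer languages and target real engine APIs, so this only illustrates the idea, not any particular product.

```python
import re
from collections import defaultdict

class BatchEngine:
    """Hypothetical processing engine with a low-level, map/reduce-style API."""

    def __init__(self, storage):
        self.storage = storage  # table name -> list of rows; stands in for the storage device

    def run_job(self, table, map_fn, reduce_fn):
        # Map phase: turn every stored row into a (key, value) pair, grouped by key.
        groups = defaultdict(list)
        for row in self.storage[table]:
            key, value = map_fn(row)
            groups[key].append(value)
        # Reduce phase: collapse each key's values into one result.
        return {key: reduce_fn(values) for key, values in groups.items()}

class QueryEngine:
    """Hypothetical engine translating one SQL-like form into low-level API calls."""

    PATTERN = re.compile(
        r"SELECT\s+(\w+)\s*,\s*COUNT\(\*\)\s+FROM\s+(\w+)\s+GROUP\s+BY\s+(\w+)",
        re.IGNORECASE,
    )

    def __init__(self, engine):
        self.engine = engine

    def execute(self, script):
        match = self.PATTERN.fullmatch(script.strip())
        if match is None or match.group(1) != match.group(3):
            raise ValueError("unsupported query: " + script)
        column, table = match.group(1), match.group(2)
        # The declarative script becomes a map function and a reduce function.
        raw = self.engine.run_job(table, map_fn=lambda row: (row[column], 1), reduce_fn=sum)
        return self.format_grid(column, raw)

    @staticmethod
    def format_grid(column, raw):
        # Raw engine output becomes a grid: a header row followed by sorted data rows.
        return [[column, "count"]] + [[key, raw[key]] for key in sorted(raw)]

storage = {"sales": [{"region": "east"}, {"region": "west"}, {"region": "east"}]}
query_engine = QueryEngine(BatchEngine(storage))
for line in query_engine.execute("SELECT region, COUNT(*) FROM sales GROUP BY region"):
    print(line)
```

The user writes only the one-line script; the map and reduce functions, and the call to `run_job`, are produced by the query engine.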

Data security can become an issue if access through the query engine is not secured, because unauthorized but tech-savvy users can then read data simply by running scripts written in an easy-to-learn scripting language. Hence, the Centralized Access Management pattern may also need to be applied to ensure that only authorized users can manipulate data via the query engine.
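
One way to picture Centralized Access Management in front of the query engine is a gate that consults a single, central policy before any script runs. In this minimal sketch, `CentralAccessPolicy`, `SecuredQueryEngine`, and the per-table grant model are hypothetical; the target table is passed in explicitly rather than parsed from the script, and the `run_query` callable stands in for the query engine itself.

```python
class AccessDenied(Exception):
    """Raised when the central policy does not permit a request."""

class CentralAccessPolicy:
    """Hypothetical central store recording which users may query which tables."""

    def __init__(self, grants):
        self.grants = grants  # user name -> set of permitted table names

    def is_allowed(self, user, table):
        return table in self.grants.get(user, set())

class SecuredQueryEngine:
    """Checks every request against the central policy before the script runs."""

    def __init__(self, policy, run_query):
        self.policy = policy
        self.run_query = run_query  # callable(table, script); stands in for the query engine

    def execute(self, user, table, script):
        if not self.policy.is_allowed(user, table):
            raise AccessDenied(f"{user} is not authorized to query {table}")
        return self.run_query(table, script)

policy = CentralAccessPolicy({"alice": {"sales"}})
engine = SecuredQueryEngine(policy, lambda table, script: f"ran {script!r} on {table}")
print(engine.execute("alice", "sales", "SELECT region FROM sales"))
```

Keeping the grants in one policy object, rather than in each engine, is what makes the control centralized: every script passes through the same check regardless of which processing engine eventually runs it.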

The divide-and-conquer rule is applied by rethinking complex logic that would otherwise be executed as a single monolithic task as multiple smaller, combinable tasks, each of which executes comparatively simple logic. These tasks are then chained together to produce the same final output that the original complex logic was meant to produce.
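
As a small, hypothetical illustration of this divide-and-conquer approach, the sketch below finds the two most frequent words (three letters or longer) once as a monolithic function and once as a chain of four simple tasks. The task names and the `chain` helper are assumptions made for the example; the point is only that the chained tasks reproduce the monolithic result.

```python
from collections import Counter
from functools import reduce

def monolithic_top_words(lines, n=2, min_length=3):
    """The original logic: tokenize, filter, count, and rank in one block."""
    counts = {}
    for line in lines:
        for word in line.split():
            word = word.lower()
            if len(word) >= min_length:
                counts[word] = counts.get(word, 0) + 1
    return sorted(counts.items(), key=lambda item: -item[1])[:n]

# The same logic decomposed into small tasks, each doing one simple thing.
def tokenize(lines):
    return [word.lower() for line in lines for word in line.split()]

def drop_short_words(words, min_length=3):
    return [word for word in words if len(word) >= min_length]

def count_words(words):
    return Counter(words)

def top_words(counts, n=2):
    return counts.most_common(n)

def chain(*tasks):
    """Compose tasks so that each task's output becomes the next task's input."""
    return lambda data: reduce(lambda result, task: task(result), tasks, data)

pipeline = chain(tokenize, drop_short_words, count_words, top_words)
lines = ["big data needs big ideas", "data beats opinions", "big wins"]
print(pipeline(lines))
print(monolithic_top_words(lines))
```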

- A programmer needs to process some data as a batch operation.

- Although the programmer knows how to interact with the batch processing engine’s API, they use a query engine to write a script via its SQL-like interface.

- The query engine transforms the script into low-level API calls specific to the batch processing engine and forwards them to that engine.

- The batch processing engine processes the data, and the computed results are returned to the query engine.

- The query engine formats the raw computed results before forwarding them to the programmer.

- Next, the programmer needs to process some data in realtime.

- The programmer does not know how to interact with the realtime processing engine’s API, so they use the query engine to write a script via its SQL-like interface.

- The query engine transforms the script into low-level API calls specific to the realtime processing engine and forwards them to that engine.

- The realtime processing engine processes the data, and the computed results are returned to the query engine.

- The query engine formats the raw computed results before forwarding them to the programmer.
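
The batch and realtime scenario can be sketched in Python under several assumptions: both engines sit behind a hypothetical common interface (`ProcessingEngine.count_by`), the SQL-like script is handled by a toy parser that reads only the `SELECT col, COUNT(*)` prefix, and the realtime stream is simulated with a list of events. The batch engine returns one result for the whole dataset, whereas the realtime engine yields an updated result after each event; in both cases the programmer submits the same script and the query engine formats the output as a grid.

```python
import re
from abc import ABC, abstractmethod
from collections import Counter

class ProcessingEngine(ABC):
    """Hypothetical common interface hiding each engine's own low-level API."""

    @abstractmethod
    def count_by(self, column):
        """Yield one or more {value: count} results."""

class BatchEngine(ProcessingEngine):
    def __init__(self, rows):
        self.rows = rows  # bounded dataset at rest

    def count_by(self, column):
        # A single job over the complete dataset produces a single result.
        yield dict(Counter(row[column] for row in self.rows))

class RealtimeEngine(ProcessingEngine):
    def __init__(self, events):
        self.events = events  # stream of events, simulated here by a list

    def count_by(self, column):
        # Running counts are updated and emitted as each event arrives.
        counts = Counter()
        for event in self.events:
            counts[event[column]] += 1
            yield dict(counts)

class QueryEngine:
    """Accepts one SQL-like script and routes it to the requested engine."""

    # Toy parser: only the selected column is extracted; the rest is ignored.
    PATTERN = re.compile(r"SELECT\s+(\w+)\s*,\s*COUNT\(\*\)", re.IGNORECASE)

    def __init__(self, engines):
        self.engines = engines  # mode name -> ProcessingEngine

    def run(self, script, mode):
        match = self.PATTERN.match(script)
        if match is None:
            raise ValueError("unsupported query: " + script)
        column = match.group(1)
        for raw in self.engines[mode].count_by(column):
            # Format each raw result as a grid: header row, then sorted data rows.
            yield [[column, "count"]] + sorted([key, value] for key, value in raw.items())

rows = [{"region": "east"}, {"region": "west"}, {"region": "east"}]
qe = QueryEngine({"batch": BatchEngine(rows), "realtime": RealtimeEngine(rows)})
script = "SELECT region, COUNT(*) FROM sales GROUP BY region"

for grid in qe.run(script, "batch"):
    print(grid)
for grid in qe.run(script, "realtime"):
    print(grid)
```

Because the programmer only ever calls `QueryEngine.run`, switching from batch to realtime processing means changing the `mode` argument rather than learning a second engine API.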

This pattern is covered in BDSCP Module 10: Fundamental Big Data Architecture.

For more information regarding the Big Data Science Certified Professional (BDSCP) curriculum, visit www.arcitura.com/bdscp.

The official textbook for the BDSCP curriculum is:

Big Data Fundamentals: Concepts, Drivers & Techniques

by Paul Buhler, PhD, Thomas Erl, Wajid Khattak

(ISBN: 9780134291079, Paperback, 218 pages)

Please note that this textbook covers fundamental topics only and does not cover design patterns.

For more information about this book, visit www.arcitura.com/books.