
High Velocity Realtime Processing (Buhler, Erl, Khattak)

How can high velocity data be processed as it arrives without any delay?


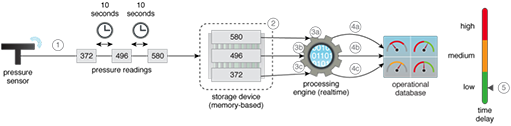

An event data transfer engine continuously captures streams of data. The incoming stream can be pre-processed while in transit, before it is stored in a memory-based storage device. A realtime processing engine, such as Spark, then processes the data held in the memory-based storage device.
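The three stages described above can be sketched in plain Python. This is only an illustration under stated assumptions: `transfer_engine`, `MemoryStore`, `process`, and `run_pipeline` are hypothetical stand-ins for the event data transfer engine, the memory-based storage device, and the realtime processing engine, and the `sensor,value` event format and the alert threshold of 100.0 are invented for the example. It does not use Spark or any real streaming product.

```python
from collections import deque
from typing import Deque, Dict, Iterable, Iterator, List


def transfer_engine(raw_events: Iterable[str]) -> Iterator[Dict]:
    """Hypothetical event data transfer engine with in-transit pre-processing."""
    for line in raw_events:
        line = line.strip()
        if not line:
            continue  # pre-processing: drop empty events before they are stored
        sensor_id, value = line.split(",")
        yield {"sensor": sensor_id, "value": float(value)}  # parse while in transit


class MemoryStore:
    """Hypothetical memory-based storage device: a bounded in-memory buffer."""

    def __init__(self, capacity: int = 1000) -> None:
        self._events: Deque[Dict] = deque(maxlen=capacity)

    def put(self, event: Dict) -> None:
        self._events.append(event)

    def latest(self) -> Dict:
        return self._events[-1]


def process(event: Dict) -> Dict:
    """Stand-in for the realtime processing engine: flag high values."""
    return {**event, "alert": event["value"] > 100.0}  # threshold is an assumption


def run_pipeline(raw_events: Iterable[str], store: MemoryStore) -> List[Dict]:
    results = []
    for event in transfer_engine(raw_events):
        store.put(event)                         # store in memory
        results.append(process(store.latest()))  # process straight from memory
    return results


results = run_pipeline(["s1,98.5", "", "s2,101.2\n"], MemoryStore())
```

The bounded `deque` is a deliberate choice in this sketch: memory is the expensive resource in this pattern, so the store keeps only the most recent events.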

The High Velocity Realtime Processing pattern is applied together with the Streaming Source and Realtime Access Storage patterns, because it requires the availability of current data and low-latency data access, respectively.

Although it enables realtime analyses, applying this pattern results in a complicated and costly data processing solution: the event data transfer engine adds complexity, and the memory-based storage device adds expense.

Data is constantly acquired from the streaming source and kept in memory, where each datum is processed as soon as it arrives. Using memory rather than disk as the storage medium reduces data access latency.
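One way to picture per-datum processing is an aggregate that lives in memory and is updated incrementally on every arrival, rather than being recomputed from a stored batch. The sketch below assumes a running mean as the computation; the `RunningMean` class and the sample values are hypothetical and are not taken from the pattern itself.

```python
class RunningMean:
    """Keeps a mean in memory and updates it once per arriving datum."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, value: float) -> float:
        # Incremental update: no earlier values need to be re-read.
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean


stats = RunningMean()
# Each value is handled on its own, as if it had just arrived from the stream.
means = [stats.update(v) for v in (10.0, 20.0, 30.0)]
```

Because each update touches only the in-memory state, the cost per datum stays constant regardless of how many values have already arrived.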

- A sensor provides pressure readings every 10 seconds.

- The readings are saved to a memory-based storage device.

- (a,b,c) A realtime processing engine processes each individual reading as soon as it arrives.

- (a,b,c) An operational dashboard is updated each time an individual reading is processed.

- Processing each reading from end to end takes very little time, so the dashboard always shows up-to-date information.
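The sensor scenario above can be simulated as follows. This is a minimal sketch, not a reference implementation: `Reading`, `Dashboard`, `handle_reading`, the pressure values, and the `PRESSURE_LIMIT` threshold are all hypothetical, and the 10-second interval is represented by timestamps rather than by real waiting.

```python
from dataclasses import dataclass
from typing import List, Optional

READING_INTERVAL_S = 10  # from the scenario: one reading every 10 seconds
PRESSURE_LIMIT = 5.0     # hypothetical alert threshold, not from the source


@dataclass
class Reading:
    timestamp_s: int
    pressure: float


@dataclass
class Dashboard:
    """Hypothetical operational dashboard showing only the latest state."""
    latest: Optional[Reading] = None
    status: str = "NO DATA"
    updates: int = 0

    def update(self, reading: Reading, status: str) -> None:
        self.latest = reading
        self.status = status
        self.updates += 1


def handle_reading(reading: Reading, memory_store: List[Reading],
                   dashboard: Dashboard) -> None:
    memory_store.append(reading)       # save the reading to memory
    latest = memory_store[-1]          # process that single reading
    status = "HIGH" if latest.pressure > PRESSURE_LIMIT else "OK"
    dashboard.update(latest, status)   # refresh the dashboard right away


memory_store: List[Reading] = []
dashboard = Dashboard()

# Simulated readings a, b and c, spaced by the sensor interval.
for i, pressure in enumerate([4.2, 4.8, 5.3]):
    handle_reading(Reading(i * READING_INTERVAL_S, pressure), memory_store, dashboard)
```

The dashboard is updated once per reading, so after the third reading it reflects only that reading's state.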

This pattern is covered in BDSCP Module 11: Advanced Big Data Architecture.

For more information regarding the Big Data Science Certified Professional (BDSCP) curriculum, visit www.arcitura.com/bdscp.

The official textbook for the BDSCP curriculum is Big Data Fundamentals: Concepts, Drivers & Techniques by Paul Buhler, PhD, Thomas Erl, and Wajid Khattak (ISBN: 9780134291079, Paperback, 218 pages).

Please note that this textbook covers fundamental topics only and does not cover design patterns.

For more information about this book, visit www.arcitura.com/books.