Dataset Denormalization (Buhler, Erl, Khattak)

How can a dataset, where related attributes are spread across more than one record, be stored in a way that lends itself to distributed data processing techniques that process data on a record-by-record basis?

A batch processing engine applies the denormalization logic, generally by making use of the engine's record parsing functionality. The default record parsing behavior is modified so that multiple consecutive lines in the file, which collectively contain all attribute values of a single logical record, are read as a single record. A new dataset is then written out in which all attributes of the same entity appear on a single line. If the processing engine does not support customizing its default record parsing behavior, implementing this pattern requires complex data wrangling operations. The same technique can be applied to denormalize hierarchical data.
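The modified record parsing described above can be sketched in plain Python. This is an illustrative sketch only, not the API of any particular processing engine: the function name `read_logical_records`, the parameter `lines_per_record`, and the sample values are hypothetical, and the sketch assumes every logical record spans the same fixed number of consecutive lines.

```python
from itertools import islice
from typing import Iterable, Iterator


def read_logical_records(lines: Iterable[str], lines_per_record: int) -> Iterator[str]:
    """Yield one comma-delimited line per logical record.

    Assumption of this sketch: each logical record occupies exactly
    `lines_per_record` consecutive physical lines.
    """
    it = iter(lines)
    while True:
        # Pull the next group of physical lines that form one logical record.
        chunk = [line.strip() for line in islice(it, lines_per_record)]
        if not chunk:
            return  # input exhausted
        if len(chunk) < lines_per_record:
            raise ValueError(f"incomplete trailing record: {chunk!r}")
        # Write all attributes of the entity out on a single line.
        yield ",".join(chunk)


# Hypothetical sample input: two physical lines per logical record.
raw = ["2016-01-04T10:15:00,C001", "25.50", "2016-01-04T11:02:00,C002", "40.00"]
denormalized = list(read_logical_records(raw, lines_per_record=2))
```

Because the function is a generator, it reads the input lazily, so a large file can be passed in directly as an open file object instead of a list.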

The Dataset Denormalization pattern is generally applicable in scenarios where the related attribute values appear consecutively, such as on consecutive lines of a comma-delimited file.

The separate records in the file that together hold the fields of one logical record are joined into a single record, and a new dataset is created from the result. The required algorithm is then executed against the newly created dataset.

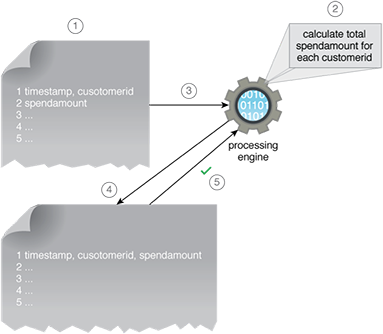

- A comma-delimited file consists of customer spending records for multiple customers across multiple dates. The timestamp and the customer ID fields are stored in one line, while the spend amount is stored in the next line.

- The file needs to be processed to find the total amount spent by each customer. However, the file cannot be processed as is because the processing engine parses the file on a line-by-line basis and can only reference field values that occur in the same line.

- Data in the file is first denormalized using a batch processing engine.

- In the denormalized dataset, all three field values (timestamp, customer ID and spend amount) occur on the same line.

- The file is successfully processed by the processing engine to find the total spend amount, as all the required field values that need to be referenced occur in the same line.
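The customer spending scenario above can be walked through end to end with a small sketch. The sample data, the customer IDs, and the function names `denormalize` and `total_spend` are hypothetical, and plain Python stands in for the batch processing engine. The sketch assumes each timestamp and customer ID line is immediately followed by its spend amount line.

```python
import csv
import io
from collections import defaultdict
from decimal import Decimal

# Hypothetical input: timestamp and customer ID on one line, spend amount on the next.
raw_file = io.StringIO(
    "2016-01-04T10:15:00,C001\n"
    "25.50\n"
    "2016-01-04T11:02:00,C002\n"
    "40.00\n"
    "2016-01-05T09:30:00,C001\n"
    "10.25\n"
)


def denormalize(lines):
    """Join each (timestamp, customer ID) line with the spend line after it."""
    it = iter(lines)
    for header in it:
        spend = next(it, None)
        if spend is None:
            raise ValueError(f"no spend line follows: {header!r}")
        yield f"{header.strip()},{spend.strip()}"


def total_spend(denormalized_lines):
    """Process line by line; every line now carries all three fields."""
    totals = defaultdict(Decimal)
    for _timestamp, customer_id, amount in csv.reader(denormalized_lines):
        totals[customer_id] += Decimal(amount)
    return dict(totals)


totals = total_spend(denormalize(raw_file))
```

`Decimal` is used instead of `float` so that currency amounts are summed without binary rounding error. The aggregation step only ever looks at one line at a time, which mirrors the record-by-record constraint that motivates the pattern.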

This pattern is covered in BDSCP Module 11: Advanced Big Data Architecture.

For more information regarding the Big Data Science Certified Professional (BDSCP) curriculum, visit www.arcitura.com/bdscp.
