
Compression Engine


Compression is the process of compacting data to reduce its size. Decompression is the reverse process: it restores compressed data to its original form and size. A compression engine provides the ability to compress and decompress data within a Big Data platform.
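The compress/decompress round trip can be sketched in Python. This is a minimal illustration and not any product's API. The `CompressionEngine` class and its methods are hypothetical, and the standard-library `zlib` module (DEFLATE, a lossless codec) stands in for whatever codec a real platform would use.

```python
import zlib


class CompressionEngine:
    """Hypothetical minimal compression engine wrapping zlib (DEFLATE)."""

    def __init__(self, level: int = 6) -> None:
        # zlib accepts levels 0 (no compression) to 9 (best compression)
        if not 0 <= level <= 9:
            raise ValueError("level must be between 0 and 9")
        self.level = level

    def compress(self, data: bytes) -> bytes:
        # Compact the data to reduce its size on disk or in memory
        return zlib.compress(data, self.level)

    def decompress(self, blob: bytes) -> bytes:
        # Restore the exact original bytes (DEFLATE is lossless)
        return zlib.decompress(blob)


engine = CompressionEngine(level=9)
original = b"sensor_id=42,status=OK;" * 1000  # hypothetical repetitive payload
packed = engine.compress(original)
restored = engine.decompress(packed)
print(len(original), len(packed), restored == original)
```

Because the codec is lossless, `restored` is byte-for-byte identical to `original`. Only the stored size changes.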

In Big Data environments, there is a requirement to acquire and store as much data as possible in order to derive the largest potential value from analysis. However, storing data in uncompressed form does not make efficient use of the available storage space. Compressing the data allows the same disk or memory capacity to hold more data. This effectively increases storage capacity and, in turn, reduces storage cost.
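To estimate how much extra capacity compression provides for a particular dataset, you can compress a representative sample and compare sizes. The sketch below uses hypothetical JSON log records and `zlib`. The ratio it reports depends entirely on the data and the codec, so treat it as a measurement technique, not a general figure.

```python
import json
import zlib

# Hypothetical semi-structured records; real workloads will compress differently
records = [
    {"user": f"u{i % 50}", "event": "page_view", "path": "/products/list", "ms": i % 300}
    for i in range(10_000)
]
raw = "\n".join(json.dumps(r) for r in records).encode("utf-8")
compressed = zlib.compress(raw, 6)

ratio = len(raw) / len(compressed)        # e.g. 5.0 would mean 5:1
savings = 1 - len(compressed) / len(raw)  # fraction of space freed
print(f"raw={len(raw)} B, compressed={len(compressed)} B, "
      f"ratio={ratio:.1f}:1, savings={savings:.0%}")
# At this ratio, a given disk holds roughly `ratio` times as much of this data.
```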

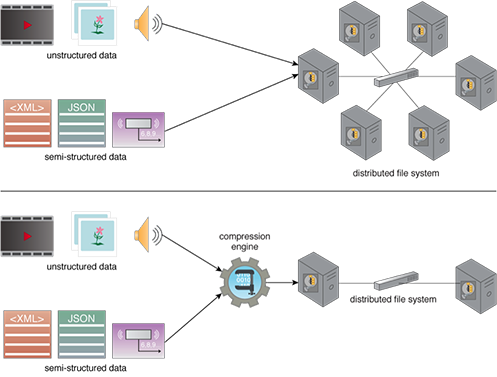

In Figure 1, a large amount of semi-structured and unstructured data needs to be stored in a distributed file system.

Figure 1 – In the top diagram, storing the data in its uncompressed form requires six disks. In the bottom diagram, a compression engine compresses the data first, so only two disks are required to store the same amount of data.
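The arithmetic behind Figure 1 can be sketched as follows. This assumes equally sized disks and ignores replication and file-system overhead. Under those assumptions, going from six disks to two implies a compression ratio of roughly 3:1. The function name and the 24 TB / 4 TB sizes are hypothetical.

```python
import math


def disks_required(data_tb: float, disk_tb: float, ratio: float = 1.0) -> int:
    """Disks needed to hold data_tb terabytes once compressed at ratio:1."""
    if data_tb < 0 or disk_tb <= 0 or ratio <= 0:
        raise ValueError("invalid sizing inputs")
    # Compressed size = raw size / ratio; round up to whole disks
    return math.ceil(data_tb / ratio / disk_tb)


# Hypothetical figures: 24 TB of raw data on 4 TB disks
print(disks_required(24, 4))           # uncompressed -> 6
print(disks_required(24, 4, ratio=3))  # compressed at 3:1 -> 2
```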


This pattern is covered in BDSCP Module 10: Fundamental Big Data Architecture.

For more information regarding the Big Data Science Certified Professional (BDSCP) curriculum, visit www.arcitura.com/bdscp.