
Load Balancer

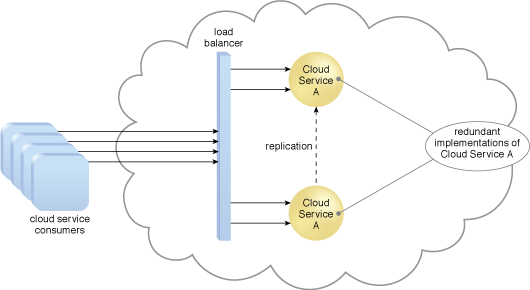

The load balancer mechanism is a runtime agent whose logic is based on horizontal scaling: it balances a workload across two or more IT resources to increase performance and capacity beyond what a single IT resource can provide. Beyond simple division-of-labor algorithms (Figure 1), load balancers can perform a range of specialized runtime workload distribution functions, including:

- Asymmetric Distribution – larger workloads are issued to IT resources with higher processing capacities

- Workload Prioritization – workloads are scheduled, queued, discarded, and distributed according to their priority levels

- Content-Aware Distribution – requests are distributed to different IT resources as dictated by the request content
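The three specialized functions listed above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the mechanism's defined behavior: asymmetric distribution uses smooth weighted round robin (one common weighting technique, swapped in here), prioritization uses a bounded priority queue that discards the least urgent entry when full, and content-aware distribution matches URL path prefixes. All class, function, and resource names (`WeightedBalancer`, `PriorityScheduler`, `route_by_content`, `large-vm`, and so on) are hypothetical.

```python
import heapq
from collections import Counter


class WeightedBalancer:
    """Asymmetric distribution via smooth weighted round robin (assumed technique)."""

    def __init__(self, weights):
        # weights: resource name -> relative processing capacity (hypothetical values)
        self.weights = dict(weights)
        self.current = {name: 0 for name in self.weights}
        self.total = sum(self.weights.values())

    def pick(self):
        # Raise every resource's score by its weight, choose the highest score,
        # then lower the winner by the total weight so the others catch up.
        for name, weight in self.weights.items():
            self.current[name] += weight
        best = max(self.current, key=self.current.get)
        self.current[best] -= self.total
        return best


class PriorityScheduler:
    """Workload prioritization; a lower number means more urgent (assumed convention)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._heap = []  # entries: (priority, arrival order, request)
        self._seq = 0
        self.discarded = []

    def submit(self, request, priority):
        heapq.heappush(self._heap, (priority, self._seq, request))
        self._seq += 1
        if len(self._heap) > self.capacity:
            # Over capacity: discard the least urgent queued entry (may be the new one).
            worst = max(self._heap)
            self._heap.remove(worst)
            heapq.heapify(self._heap)
            self.discarded.append(worst[2])

    def next_request(self):
        # Dispatch the most urgent request, or None if the queue is empty.
        return heapq.heappop(self._heap)[2] if self._heap else None


def route_by_content(path, rules, default):
    """Content-aware distribution: the first matching path prefix wins."""
    for prefix, pool in rules:
        if path.startswith(prefix):
            return pool
    return default


# Usage with hypothetical resources and requests.
weighted = WeightedBalancer({"large-vm": 3, "small-vm": 1})
counts = Counter(weighted.pick() for _ in range(8))
print(counts)  # Counter({'large-vm': 6, 'small-vm': 2})

scheduler = PriorityScheduler(capacity=2)
scheduler.submit("batch-report", priority=5)
scheduler.submit("checkout", priority=1)
scheduler.submit("thumbnail", priority=9)
order = [scheduler.next_request(), scheduler.next_request()]
print(order, scheduler.discarded)  # ['checkout', 'batch-report'] ['thumbnail']

rules = [("/images/", "static-pool"), ("/api/", "api-pool")]
print(route_by_content("/api/orders", rules, "web-pool"))  # api-pool
print(route_by_content("/about", rules, "web-pool"))  # web-pool
```

Smooth weighted round robin is used here because it interleaves picks (large, large, small, large) instead of sending bursts to the higher-capacity resource; a real load balancer may use a different weighting scheme.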

Figure 1 – A load balancer implemented as a service agent transparently distributes incoming workload request messages across two redundant cloud service implementations, which in turn maximizes performance for the cloud service consumers.
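The simple division of labor shown in Figure 1 can be sketched as round-robin distribution, assuming the load balancer sends each new request to the next of the two redundant implementations in turn. This is an illustrative Python sketch rather than a prescribed algorithm; `RoundRobinBalancer` and the implementation names are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands each incoming request to the next backend in a fixed rotation."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._rotation = cycle(backends)

    def route(self, request):
        # Pick the next backend in turn; the request content is ignored.
        backend = next(self._rotation)
        return backend, request


# Hypothetical names for the two redundant cloud service implementations.
balancer = RoundRobinBalancer(["service-impl-A", "service-impl-B"])
assignments = [balancer.route(f"req-{i}")[0] for i in range(4)]
print(assignments)  # ['service-impl-A', 'service-impl-B', 'service-impl-A', 'service-impl-B']
```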

A load balancer is programmed or configured with a set of performance and QoS rules and parameters with the general objectives of optimizing IT resource usage, avoiding overloads, and maximizing throughput.
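As a minimal sketch of how such rules and parameters might be expressed, the following Python code assumes one hypothetical rule set: each IT resource has a maximum number of concurrent requests (`max_active`), no resource is given work beyond that limit, and among resources with spare capacity the one with the lowest utilization is chosen (a capacity-weighted variant of least-connections selection, swapped in for illustration). `Backend`, `LeastUtilizedBalancer`, and the resource names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    max_active: int  # hypothetical per-resource capacity rule
    active: int = 0  # requests currently in flight


class LeastUtilizedBalancer:
    def __init__(self, backends):
        self.backends = backends

    def acquire(self):
        # Avoid overloads: only consider resources below their capacity limit.
        candidates = [b for b in self.backends if b.active < b.max_active]
        if not candidates:
            return None  # every resource is full; the caller may queue or reject
        # Prefer the resource with the lowest utilization ratio.
        best = min(candidates, key=lambda b: b.active / b.max_active)
        best.active += 1
        return best.name

    def release(self, name):
        # Mark one in-flight request on the named resource as finished.
        for b in self.backends:
            if b.name == name:
                if b.active == 0:
                    raise ValueError(f"{name} has no active requests")
                b.active -= 1
                return
        raise KeyError(name)


balancer = LeastUtilizedBalancer([Backend("vm-1", max_active=2), Backend("vm-2", max_active=1)])
first = [balancer.acquire() for _ in range(4)]
print(first)  # ['vm-1', 'vm-2', 'vm-1', None]
balancer.release("vm-2")
print(balancer.acquire())  # vm-2
```

Returning `None` when every resource is at its limit leaves the overload decision (queue, retry, or reject) to the caller, which keeps the capacity rule separate from the prioritization policy.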

The load balancer mechanism can exist as a:

- multi-layer network switch

- dedicated hardware appliance

- dedicated software-based system (common in server operating systems)

- service agent (usually controlled by cloud management software)

The load balancer is typically located on the communication path between the IT resources generating the workload and the IT resources performing the workload processing. This mechanism can be designed as a transparent agent that remains hidden from the cloud service consumers, or as a proxy component that abstracts the IT resources performing the workload.

Related Patterns:

- Load Balanced Virtual Server Instances

- Load Balanced Virtual Switches

- Micro Scatter-Gather

- Service Load Balancing

- Storage Workload Management

- Usage Monitoring

- Workload Distribution

This mechanism is covered in CCP Module 4: Fundamental Cloud Architecture.

For more information regarding the Cloud Certified Professional (CCP) curriculum, visit www.arcitura.com/ccp.

This cloud computing mechanism is covered in:

Cloud Computing: Concepts, Technology & Architecture by Thomas Erl, Zaigham Mahmood, and Ricardo Puttini

(ISBN: 9780133387520, Hardcover, 260+ Illustrations, 528 pages)

For more information about this book, visit www.arcitura.com/books.