
Complex Logic Decomposition (Buhler, Erl, Khattak)

How can complex processing tasks be carried out in a manageable fashion when using contemporary processing techniques?

Solution

The divide-and-conquer rule is applied. Complex logic that would otherwise have to be executed as a single monolithic task is rethought as multiple smaller, combinable tasks, each of which executes comparatively simple logic. These tasks are then chained together to produce the same final output that the original complex logic was intended to produce.

Application

Separate processing routines are developed such that each routine implements simple logic. Each routine is then executed as a separate processing run of a processing engine such as MapReduce. Through configuration, the output of the first processing run is piped to the second processing run, and so on. The output of the last processing run is the required final output.

Applying the Complex Logic Decomposition pattern results in multiple processing routines. Modifications to any routine therefore need to be properly documented, because a change to one routine may change the behavior of other routines. Depending on the functionality supported by the processing engine, the Automated Dataset Execution pattern may need to be applied to connect the multiple processing runs together.
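The chaining described above can be illustrated with a minimal, single-process Python sketch, assuming Python 3.9+. `run_job` is a hypothetical stand-in for one processing run of a MapReduce-style engine: it maps each record, groups by key, and reduces each group. It is not the API of MapReduce or of any real framework. `run_pipeline` and the `PIPELINE` list stand in for the configuration that pipes one run's output into the next. The example statistic, a histogram of word frequencies, is an assumed illustration.

```python
from collections import defaultdict
from typing import Callable, Hashable, Iterable

# Hypothetical record type: every record is a (key, value) pair.
Record = tuple[Hashable, object]
Mapper = Callable[[Record], Iterable[Record]]
Reducer = Callable[[Hashable, list], Iterable[Record]]


def run_job(records: Iterable[Record], mapper: Mapper, reducer: Reducer) -> list[Record]:
    """Simulate one stateless processing run: map, group by key, reduce."""
    groups: defaultdict = defaultdict(list)
    for record in records:
        for key, value in mapper(record):
            groups[key].append(value)
    output: list[Record] = []
    for key, values in groups.items():
        output.extend(reducer(key, values))
    return output


def run_pipeline(records: Iterable[Record], stages: list[tuple[Mapper, Reducer]]) -> list[Record]:
    """Pipe each run's output into the next run; the last run's output is the final result."""
    for mapper, reducer in stages:
        records = run_job(records, mapper, reducer)
    return list(records)


# Run 1 (simple logic): count occurrences of each word.
def split_words(record):
    _, line = record
    for word in line.lower().split():
        yield word, 1


def sum_counts(word, ones):
    yield word, sum(ones)


# Run 2 (simple logic): count how many distinct words share each frequency.
def by_frequency(record):
    word, count = record
    yield count, word


def count_words(count, words):
    yield count, len(words)


# The "configuration": an ordered list of (mapper, reducer) stages.
PIPELINE = [(split_words, sum_counts), (by_frequency, count_words)]

lines = [(0, "a rose is a rose"), (1, "is a rose")]
print(sorted(run_pipeline(lines, PIPELINE)))  # [(2, 1), (3, 2)]
```

The histogram needs two runs because each run groups by only one key. The first run groups by word, and the second regroups the resulting counts by frequency. Because each stage is a plain (mapper, reducer) pair, it can be tested in isolation. However, any change to a stage's output format affects the stage that consumes it, as noted above.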

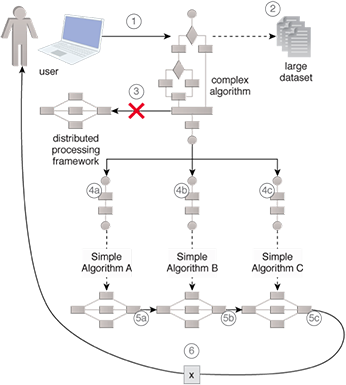

- A user develops a complex algorithm to compute a statistic.

- The user needs to apply the algorithm to a large dataset.

- The user chooses to implement the complex algorithm using a distributed processing framework. However, due to the stateless nature of the framework, the user cannot implement the algorithm as a single processing run.

- (a,b,c) To make the implementation possible, the user splits the complex algorithm into simpler Algorithms A, B and C.

- (a,b,c) The three simpler algorithms are then executed as three separate processing runs. The output of the first processing run becomes the input of the second processing run, and the output of the second processing run becomes the input of the third processing run.

- The output of the last processing run is the required statistic that is returned to the user.
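The steps above can be sketched in Python, with three plain functions standing in for Algorithms A, B and C. Everything here is an assumption for illustration: the hypothetical `transactions` dataset, the function names, and the chosen statistic (the population variance of per-customer totals). Each function reads only the previous run's output. The final assertion cross-checks the chained result against a monolithic computation of the same statistic.

```python
import math
import statistics

# Hypothetical input dataset of (customer, amount) transaction records.
transactions = [("c1", 10.0), ("c2", 5.0), ("c1", 2.5), ("c3", 7.5), ("c2", 1.0)]


def algorithm_a(records):
    """Run A: aggregate amounts per customer -> [(customer, total), ...]."""
    totals = {}
    for customer, amount in records:
        totals[customer] = totals.get(customer, 0.0) + amount
    return list(totals.items())


def algorithm_b(records):
    """Run B: reduce all totals to one record of partial sums -> [(count, sum, sum_of_squares)]."""
    n = len(records)
    s = sum(total for _, total in records)
    ss = sum(total * total for _, total in records)
    return [(n, s, ss)]


def algorithm_c(records):
    """Run C: population variance of the totals, computed only from Run B's partial sums."""
    [(n, s, ss)] = records
    mean = s / n
    return ss / n - mean * mean


def monolithic(records):
    """The original complex logic, written as a single task, for comparison."""
    totals = {}
    for customer, amount in records:
        totals[customer] = totals.get(customer, 0.0) + amount
    return statistics.pvariance(list(totals.values()))


# Chain the runs: the output of each run is the input of the next.
statistic = algorithm_c(algorithm_b(algorithm_a(transactions)))
assert math.isclose(statistic, monolithic(transactions))
print(statistic)
```

Run B emits a single small record regardless of dataset size, which keeps Run C trivial. The trade-off is that the sum-of-squares form of the variance loses precision when values are large relative to their spread.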

This pattern is covered in BDSCP Module 10: Fundamental Big Data Architecture.

For more information regarding the Big Data Science Certified Professional (BDSCP) curriculum, visit www.arcitura.com/bdscp.

The official textbook for the BDSCP curriculum is:

Big Data Fundamentals: Concepts, Drivers & Techniques

by Paul Buhler, PhD, Thomas Erl, Wajid Khattak

(ISBN: 9780134291079, Paperback, 218 pages)

Please note that this textbook covers fundamental topics only and does not cover design patterns.

For more information about this book, visit www.arcitura.com/books.