
Workflow Engine


The ability to query data and perform ETL operations via the query engine is useful for ad-hoc data analysis. However, obtaining up-to-date results from the latest data often requires repeating the same set of operations in a particular order. A workflow engine provides the ability to design and process a complex sequence of operations that can be triggered either at set time intervals or when data becomes available.
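To make the two trigger types concrete, here is a minimal Python sketch, assuming a simple polling scheduler. `Workflow`, `is_due`, and `tick` are hypothetical names invented for illustration, not the API of any particular workflow engine, and the `new_data` flag stands in for whatever data-availability check a real engine would perform (for example, detecting a newly arrived file or partition).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, List, Optional


@dataclass
class Workflow:
    """A fixed, ordered sequence of operations plus its trigger conditions."""
    name: str
    steps: List[Callable[[], None]]
    interval: Optional[timedelta] = None  # time-based trigger (None = disabled)
    on_new_data: bool = False             # data-availability trigger
    last_run: Optional[datetime] = None

    def is_due(self, now: datetime, new_data: bool) -> bool:
        if self.on_new_data and new_data:
            return True
        if self.interval is not None:
            return self.last_run is None or now - self.last_run >= self.interval
        return False

    def run(self, now: datetime) -> None:
        for step in self.steps:  # same operations, same order, on every run
            step()
        self.last_run = now


def tick(workflows: List[Workflow], now: datetime, new_data: bool) -> List[str]:
    """One scheduler pass: run every due workflow and return the names that ran."""
    ran = []
    for wf in workflows:
        if wf.is_due(now, new_data):
            wf.run(now)
            ran.append(wf.name)
    return ran


# Usage: one workflow on a 24-hour interval, one triggered by data arrival.
performed: List[str] = []
nightly = Workflow("nightly_etl",
                   [lambda: performed.append("extract"), lambda: performed.append("load")],
                   interval=timedelta(hours=24))
on_arrival = Workflow("on_arrival", [lambda: performed.append("refresh")], on_new_data=True)
start = datetime(2024, 1, 1)
print(tick([nightly, on_arrival], start, new_data=False))                        # ['nightly_etl']
print(tick([nightly, on_arrival], start + timedelta(hours=6), new_data=True))    # ['on_arrival']
print(tick([nightly, on_arrival], start + timedelta(hours=24), new_data=False))  # ['nightly_etl']
```

A real engine would persist `last_run` and evaluate triggers continuously; this sketch keeps state in memory so that each scheduler pass is easy to follow.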

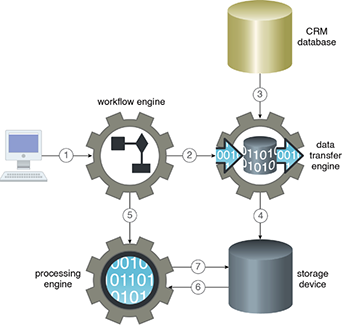

The workflow logic processed by a workflow engine can involve the participation of other Big Data mechanisms, as shown in Figure 1. For example, a workflow engine can execute logic that collects relational data from multiple databases at regular intervals via the data transfer engine, applies a set of ETL operations via the processing engine, and finally persists the results to a NoSQL storage device.
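The example workflow above can be sketched end to end in Python, assuming in-memory SQLite databases as the relational sources, a plain function as the processing engine, and a dictionary of JSON documents as a stand-in for the NoSQL storage device. All function names (`make_source_db`, `transfer`, `process`, `persist`, `run_workflow`) are hypothetical; in practice each step would be a job submitted to a separate engine rather than a local function call.

```python
import json
import sqlite3
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Tuple[str, float]


def make_source_db(rows: List[Row]) -> sqlite3.Connection:
    """Hypothetical relational source: an in-memory SQLite 'orders' table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    return conn


def transfer(sources: List[sqlite3.Connection]) -> List[Row]:
    """Data transfer engine stand-in: collect rows from every source database."""
    rows: List[Row] = []
    for conn in sources:
        rows.extend(conn.execute("SELECT customer, amount FROM orders").fetchall())
    return rows


def process(rows: List[Row]) -> Dict[str, float]:
    """Processing engine stand-in: clean customer names, then total per customer."""
    totals: Dict[str, float] = defaultdict(float)
    for customer, amount in rows:
        totals[customer.strip().lower()] += amount
    return dict(totals)


def persist(totals: Dict[str, float], store: Dict[str, str]) -> None:
    """NoSQL stand-in: a key-value store holding one JSON document per customer."""
    for customer, total in totals.items():
        store[customer] = json.dumps({"customer": customer, "total": total})


def run_workflow(sources: List[sqlite3.Connection], store: Dict[str, str]) -> None:
    """One scheduled run: transfer, then ETL, then persist, always in this order."""
    persist(process(transfer(sources)), store)


store: Dict[str, str] = {}
db_a = make_source_db([("Alice", 10.0), ("Bob", 5.0)])
db_b = make_source_db([("alice ", 2.5)])
run_workflow([db_a, db_b], store)
print(store["alice"])  # {"customer": "alice", "total": 12.5}
```

Scheduling `run_workflow` at regular intervals, for instance with a trigger like the one sketched earlier in this section, is what turns this one-off pipeline into a workflow.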

The defined workflows are analogous to a flowchart with control logic, such as decisions, forks, and joins, and they generally rely on a batch-style processing engine for execution. The output of one workflow can become the input of another workflow.
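The flowchart analogy can be illustrated with a small directed acyclic graph (DAG) runner in Python. This is a sketch under simplifying assumptions: nodes run one at a time in dependency order using the standard-library `graphlib.TopologicalSorter` (Python 3.9+), so the two fork branches execute sequentially rather than in parallel, and the decision node only selects a label instead of pruning downstream branches. `run_dag` and all node names are hypothetical.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Any, Callable, Dict, Optional, Tuple

# Each node: (function, names of the upstream nodes whose outputs it receives).
Node = Tuple[Callable[..., Any], Tuple[str, ...]]


def run_dag(nodes: Dict[str, Node], inputs: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Run nodes in dependency order; `inputs` can carry another workflow's output."""
    results: Dict[str, Any] = dict(inputs or {})
    graph = {name: set(deps) for name, (_, deps) in nodes.items()}
    for name in TopologicalSorter(graph).static_order():
        if name in results:  # supplied from outside, nothing to compute
            continue
        fn, deps = nodes[name]
        results[name] = fn(*(results[d] for d in deps))
    return results


# Workflow A: fork after "extract", join at "summary", then a decision.
workflow_a = {
    "extract": (lambda: [3, 8, 15, 4], ()),
    "total": (lambda xs: sum(xs), ("extract",)),                                   # fork branch 1
    "outliers": (lambda xs: [x for x in xs if x > 10], ("extract",)),              # fork branch 2
    "summary": (lambda t, o: {"total": t, "outliers": o}, ("total", "outliers")),  # join
    "action": (lambda s: "alert" if s["outliers"] else "archive", ("summary",)),   # decision
}
out_a = run_dag(workflow_a)

# Workflow B consumes workflow A's "summary" output as its input.
workflow_b = {
    "notify": (lambda s: f"{len(s['outliers'])} outlier(s), total={s['total']}", ("summary",)),
}
out_b = run_dag(workflow_b, inputs={"summary": out_a["summary"]})
print(out_a["action"], "|", out_b["notify"])  # alert | 1 outlier(s), total=30
```

Passing `out_a["summary"]` into `run_dag` as an external input is how this sketch models one workflow's output becoming the input of another.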

Figure 1 – A client first creates a workflow job using the workflow engine (1). As the first step of the workflow job, the workflow engine triggers a data ingress job (2), which the data transfer engine executes as a data import from a CRM database (3). The imported data is then persisted in the storage device (4). As the second step of the workflow job, the workflow engine triggers the processing engine to execute a data processing job (5). In response, the processing engine retrieves the required data from the storage device (6), executes the data processing job, and then persists the results back to the storage device (7).
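The interaction in Figure 1 can be traced with a Python sketch in which each mechanism is a small class and every action is logged with the step number from the figure. The class and method names, the sample CRM rows, and the in-memory `StorageDevice` are hypothetical stand-ins. The point of the sketch is the division of labor: the workflow engine only triggers jobs in order, while the data transfer and processing engines do the actual work against the shared storage device.

```python
from typing import Callable, Dict, List, Tuple


class StorageDevice:
    """Hypothetical stand-in for a distributed file system or NoSQL database."""

    def __init__(self) -> None:
        self.datasets: Dict[str, list] = {}


class DataTransferEngine:
    def import_data(self, source: List[dict], storage: StorageDevice,
                    target: str, log: List[str]) -> None:
        log.append("(3) import from CRM database")
        storage.datasets[target] = list(source)
        log.append(f"(4) persist '{target}' to storage device")


class ProcessingEngine:
    def run(self, job: Callable[[list], list], storage: StorageDevice,
            source: str, target: str, log: List[str]) -> None:
        log.append(f"(6) read '{source}' from storage device")
        result = job(storage.datasets[source])  # execute the data processing job
        storage.datasets[target] = result
        log.append(f"(7) persist '{target}' to storage device")


class WorkflowEngine:
    """Only orchestrates: it triggers jobs on other engines, in order."""

    def __init__(self, log: List[str]) -> None:
        self.log = log
        self.steps: List[Tuple[str, Callable[[], None]]] = []

    def create_job(self, steps: List[Tuple[str, Callable[[], None]]]) -> None:
        self.steps = steps
        self.log.append("(1) workflow job created")

    def start(self) -> None:
        for label, trigger in self.steps:
            self.log.append(label)
            trigger()


log: List[str] = []
storage = StorageDevice()
transfer_engine, processing_engine = DataTransferEngine(), ProcessingEngine()
workflow = WorkflowEngine(log)
crm_rows = [{"customer": "c1", "active": True}, {"customer": "c2", "active": False}]
workflow.create_job([
    ("(2) trigger data ingress job",
     lambda: transfer_engine.import_data(crm_rows, storage, "crm_raw", log)),
    ("(5) trigger data processing job",
     lambda: processing_engine.run(lambda rows: [r for r in rows if r["active"]],
                                   storage, "crm_raw", "crm_active", log)),
])
workflow.start()
print("\n".join(log))
```

Running it prints the seven numbered steps in the same order as the figure, with the processing job here reduced to a simple filter on active customers.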

Related Patterns:

This mechanism is covered in BDSCP Module 2: Big Data Analysis & Technology Concepts.

For more information regarding the Big Data Science Certified Professional (BDSCP) curriculum, visit www.arcitura.com/bdscp.