Automatic Data Replication and Reconstruction (Buhler, Erl, Khattak)

How can large amounts of data be stored in a fault-tolerant manner so that the data remains available in the face of hardware failures?

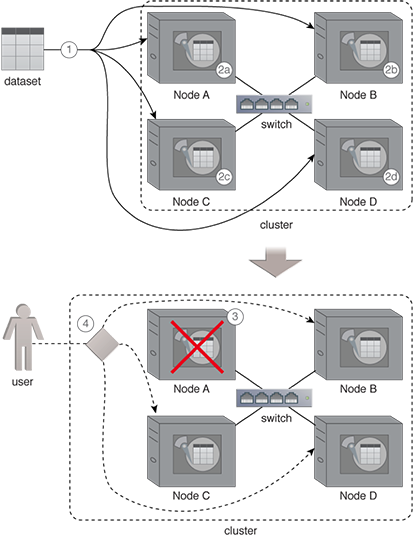

The dataset that needs to be stored is automatically copied across multiple machines in a cluster, so that if one machine becomes unavailable, the data can still be accessed from another. In addition, the system automatically reconstructs any copy that was lost due to a machine failure, restoring the intended number of copies. Apart from providing fault tolerance and high availability, applying the Automatic Data Replication and Reconstruction pattern also improves data access performance, because read requests can be served by any of several copies, and it enables scaling out.
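The replicate-then-reconstruct cycle described above can be sketched as a small in-memory model. This is a minimal illustration under stated assumptions, not how any particular distributed file system is implemented: the class `ReplicatedStore`, its methods, the block name `blk-1` and the least-loaded placement rule are all hypothetical. Writes place `replication_factor` copies on distinct live nodes; when a node fails, every block that dropped below that target is copied from a surviving replica to another live node.

```python
class ReplicatedStore:
    """Hypothetical in-memory model of a replicated, self-healing block store."""

    def __init__(self, node_names, replication_factor):
        if replication_factor > len(node_names):
            raise ValueError("replication factor exceeds cluster size")
        self.replication_factor = replication_factor
        self.nodes = {name: {} for name in node_names}  # node -> {block_id: data}
        self.alive = set(node_names)  # nodes that have not failed

    def _least_loaded(self, candidates):
        # Assumed placement rule: prefer nodes holding the fewest blocks.
        return sorted(candidates, key=lambda n: (len(self.nodes[n]), n))

    def replicas(self, block_id):
        """Live nodes that currently hold a copy of block_id, in name order."""
        return sorted(n for n in self.alive if block_id in self.nodes[n])

    def write(self, block_id, data):
        # Replication: store the block on replication_factor distinct live nodes.
        targets = self._least_loaded(self.alive)[: self.replication_factor]
        if len(targets) < self.replication_factor:
            raise RuntimeError("not enough live nodes to meet the replication factor")
        for node in targets:
            self.nodes[node][block_id] = data

    def fail(self, node):
        # Simulate a hardware failure: the node and everything on it is lost.
        self.alive.discard(node)
        self.nodes[node].clear()
        self._reconstruct()

    def _reconstruct(self):
        # Reconstruction: copy each under-replicated block from a surviving
        # replica onto live nodes that do not hold it yet.
        surviving = {b for n in self.alive for b in self.nodes[n]}
        for block_id in sorted(surviving):
            holders = self.replicas(block_id)
            missing = self.replication_factor - len(holders)
            if missing <= 0:
                continue
            data = self.nodes[holders[0]][block_id]
            spares = [n for n in self._least_loaded(self.alive) if n not in holders]
            for node in spares[:missing]:
                self.nodes[node][block_id] = data


store = ReplicatedStore(["A", "B", "C", "D", "E"], replication_factor=3)
store.write("blk-1", b"payload")
print(store.replicas("blk-1"))  # ['A', 'B', 'C']
store.fail("A")
print(store.replicas("blk-1"))  # ['B', 'C', 'D']
```

Placing copies on the least-loaded nodes is only one possible policy, chosen here for simplicity; a real system may weigh other factors when choosing where each copy goes.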

- A dataset is saved using a distributed file system.

- (a,b,c,d) The distributed file system automatically creates four copies of the dataset and saves the copies to Nodes A, B, C and D of a cluster.

- Due to a hardware failure, Node A becomes unavailable.

- When a user tries to read the dataset, the read still succeeds because the user is transparently redirected to one of the available Nodes B, C or D.
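The read path in the walkthrough above can be mirrored by a client that tries each replica in turn and skips any node that does not respond. The sketch is hypothetical: `Node`, `NodeUnavailable` and `read_with_failover` are illustrative names, not part of any real distributed file system API, and the replica list is hard-coded for the four-node scenario.

```python
class NodeUnavailable(Exception):
    """Raised when a (hypothetical) node cannot serve a read."""


class Node:
    def __init__(self, name):
        self.name = name
        self.up = True
        self.blocks = {}  # block_id -> data

    def read(self, block_id):
        if not self.up:
            raise NodeUnavailable(self.name)
        return self.blocks[block_id]


def read_with_failover(replica_nodes, block_id):
    """Try each replica in order; return (node name, data) from the first live one."""
    errors = []
    for node in replica_nodes:
        try:
            return node.name, node.read(block_id)
        except NodeUnavailable as exc:
            errors.append(str(exc))  # skip the failed node and try the next copy
    raise NodeUnavailable("all replicas unavailable: " + ", ".join(errors))


# Mirror the walkthrough: four copies on Nodes A-D, then Node A fails.
cluster = [Node(n) for n in "ABCD"]
for node in cluster:
    node.blocks["dataset"] = b"rows..."
cluster[0].up = False  # hardware failure on Node A
served_by, data = read_with_failover(cluster, "dataset")
print(served_by, data)  # prints: B b'rows...'
```

The read fails only if every node holding a copy is down, which is why keeping several copies, and reconstructing lost ones, preserves availability.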

This pattern is covered in BDSCP Module 10: Fundamental Big Data Architecture.

For more information regarding the Big Data Science Certified Professional (BDSCP) curriculum, visit www.arcitura.com/bdscp.

The official textbook for the BDSCP curriculum is:

Big Data Fundamentals: Concepts, Drivers & Techniques

by Paul Buhler, PhD, Thomas Erl, Wajid Khattak

(ISBN: 9780134291079, Paperback, 218 pages)

Please note that this textbook covers fundamental topics only and does not cover design patterns.

For more information about this book, visit www.arcitura.com/books.