
Canonical Data Format (Buhler, Erl, Khattak)

How can the same dataset be consumed by disparate client programs?

An interoperable data encoding scheme is selected and adopted as the standard serialization scheme within the Big Data platform, together with a serialization engine capable of encoding and decoding data in that scheme. To save time and avoid unnecessary processing, the data transfer engine can be configured to output data directly in the standardized encoding. In other cases, especially with relational data or datasets acquired from third-party sources such as data markets, the datasets must first be processed to shape them into the required format.
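The shaping step for relational or third-party data can be sketched as follows. This is a minimal illustration only: it assumes the source arrives as CSV text and that the standardized encoding is newline-delimited JSON, neither of which the pattern prescribes. The `CANONICAL_SCHEMA` mapping, the `to_canonical` helper, and the field names are hypothetical.

```python
import csv
import io
import json

# Hypothetical canonical schema: field name -> type used to coerce CSV strings.
CANONICAL_SCHEMA = {"customer_id": int, "name": str, "balance": float}


def to_canonical(csv_text: str) -> str:
    """Shape CSV rows into newline-delimited JSON that follows CANONICAL_SCHEMA."""
    reader = csv.DictReader(io.StringIO(csv_text))
    lines = []
    for row in reader:
        # Keep only the schema's fields and coerce each value to its declared type.
        record = {field: cast(row[field]) for field, cast in CANONICAL_SCHEMA.items()}
        lines.append(json.dumps(record, sort_keys=True))
    return "\n".join(lines)


# Assumed example export from a relational source.
relational_export = "customer_id,name,balance\n1,Ada,10.50\n2,Grace,0\n"
canonical = to_canonical(relational_export)
print(canonical)
```

Coercing every value through the schema at ingestion time means downstream clients never see the untyped strings of the original export.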

A canonical, extensible serialization format is chosen for storing data so that disparate clients can both read and write it. This removes the need to convert between data formats or to keep multiple copies of a dataset in different formats. The canonical format is generally schema-driven, meaning that it provides information about the structure of the data.

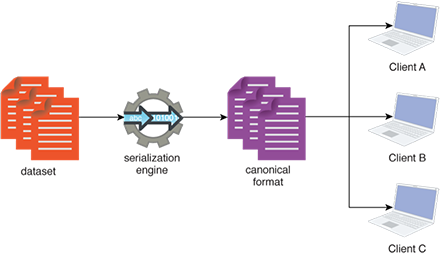

A dataset is serialized into a common format that is then consumed by three disparate clients without the need to perform any data format conversion.
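The idea of a schema-driven canonical format read by several clients can be sketched as below. This is an illustration under stated assumptions, not a production serialization engine: the `encode` and `decode` functions, the `SCHEMA` layout, and the sensor fields are all hypothetical, and JSON is used only to keep the example self-contained. A real platform would more likely use a dedicated schema-based format such as Apache Avro.

```python
import json

# Hypothetical schema describing the structure of each record.
SCHEMA = {
    "name": "SensorReading",
    "fields": [
        {"name": "sensor_id", "type": "string"},
        {"name": "temperature", "type": "double"},
    ],
}

# Mapping from schema type names to Python types (assumed for this sketch).
_PY_TYPES = {"string": str, "double": float, "long": int}


def encode(records, schema):
    """Validate records against the schema, then serialize schema and data together."""
    for record in records:
        for field in schema["fields"]:
            value = record[field["name"]]
            if not isinstance(value, _PY_TYPES[field["type"]]):
                raise TypeError(f"{field['name']} must be of type {field['type']}")
    return json.dumps({"schema": schema, "records": records}).encode("utf-8")


def decode(payload):
    """Return (schema, records) so any client can discover the data's structure."""
    doc = json.loads(payload.decode("utf-8"))
    return doc["schema"], doc["records"]


# The dataset is serialized once.
payload = encode(
    [
        {"sensor_id": "a1", "temperature": 21.5},
        {"sensor_id": "b2", "temperature": 19.0},
    ],
    SCHEMA,
)

# Client 1: a reporting job that averages a numeric field.
_, rows = decode(payload)
average = sum(r["temperature"] for r in rows) / len(rows)

# Client 2: a catalog tool that inspects only the schema.
schema, _ = decode(payload)
field_names = [f["name"] for f in schema["fields"]]
```

Both clients read the same payload without a conversion step, and because the schema travels with the data, a client can discover the structure without prior knowledge of the dataset.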

This pattern is covered in BDSCP Module 11: Advanced Big Data Architecture.

For more information regarding the Big Data Science Certified Professional (BDSCP) curriculum, visit www.arcitura.com/bdscp.

The official textbook for the BDSCP curriculum is Big Data Fundamentals: Concepts, Drivers & Techniques by Paul Buhler, PhD, Thomas Erl, and Wajid Khattak (ISBN: 9780134291079, Paperback, 218 pages).

Please note that this textbook covers fundamental topics only and does not cover design patterns.

For more information about this book, visit www.arcitura.com/books.