Streaming Source (Buhler, Erl, Khattak)

How can high-velocity data be imported reliably into a Big Data platform in realtime?

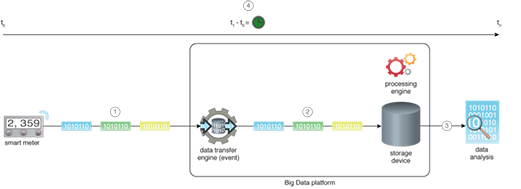

An event data transfer engine mechanism is introduced within the Big Data platform. The engine is configured with the data sources and the destination. Once configured, it automatically ingests events as the source generates them and publishes each ingested event to the configured subscribers. A queue is generally used to store the events, providing fault tolerance and scalability.
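The ingest, queue, and publish flow described above can be illustrated with a minimal in-memory sketch. Everything in it is an assumption made for illustration: the `EventTransferEngine` class, its method names, and the `max_buffered` parameter are hypothetical and do not correspond to any particular product. An in-process queue also does not survive a crash, so a real deployment would rely on a durable, distributed queue to obtain the fault tolerance and scalability the pattern refers to.

```python
import queue
import threading
from typing import Callable, Dict, List

Event = Dict[str, object]


class EventTransferEngine:
    """Hypothetical in-memory stand-in for an event data transfer engine."""

    def __init__(self, max_buffered: int = 1000) -> None:
        # Bounded queue that buffers events between ingestion and delivery.
        self._buffer = queue.Queue(maxsize=max_buffered)
        self._subscribers: List[Callable[[Event], None]] = []
        self._worker = threading.Thread(target=self._dispatch, daemon=True)

    def subscribe(self, handler: Callable[[Event], None]) -> None:
        """Register a destination that receives every ingested event."""
        self._subscribers.append(handler)

    def start(self) -> None:
        self._worker.start()

    def ingest(self, event: Event) -> None:
        """Accept one event from a source; blocks while the buffer is full."""
        self._buffer.put(event)

    def stop(self) -> None:
        """Deliver everything already buffered, then stop the worker."""
        self._buffer.put(None)  # sentinel marking the end of the stream
        self._worker.join()

    def _dispatch(self) -> None:
        # Publish events to all subscribers in arrival order.
        while True:
            event = self._buffer.get()
            if event is None:
                break
            for handler in self._subscribers:
                handler(event)
```

A caller would register one or more handlers with `subscribe`, call `start`, pass each event to `ingest` as the source produces it, and call `stop` to flush the buffer. The bounded queue means a slow subscriber causes `ingest` to block instead of letting memory grow without limit, which is one simple form of backpressure.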

This pattern is generally applied together with the Realtime Access Storage and High Velocity Realtime Processing patterns.

Instead of collating individual data events into a file, a system is implemented that captures events as the data source produces them and forwards them to the Big Data platform for immediate processing. Doing so enables realtime capture of data without the delay of waiting for a file to accumulate.

- Individual readings transmitted by a smart meter every 30 seconds are captured by an event data transfer engine.

- Each event is imported into the Big Data platform as it gets captured by the event data transfer engine.

- Data is then analyzed to find insights.

- The whole process takes a very short time to execute.
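The smart meter scenario above can be sketched as a stream that is analyzed one reading at a time. This is only an illustration under stated assumptions: the `MeterReading` fields, the `simulated_meter` generator, and the choice of running mean and peak as "insights" are all hypothetical, and the 30-second spacing is simulated through timestamps instead of real waiting.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, Iterator, List


@dataclass
class MeterReading:
    meter_id: str
    taken_at: datetime
    kwh: float


def simulated_meter(
    meter_id: str,
    values: List[float],
    start: datetime,
    interval: timedelta = timedelta(seconds=30),
) -> Iterator[MeterReading]:
    """Yield one reading per interval (timestamps only, no real delay)."""
    for i, kwh in enumerate(values):
        yield MeterReading(meter_id, start + i * interval, kwh)


@dataclass
class RunningInsight:
    """Statistics updated per event, so no batch file is ever needed."""

    count: int = 0
    total_kwh: float = 0.0
    peak_kwh: float = 0.0

    def update(self, reading: MeterReading) -> None:
        self.count += 1
        self.total_kwh += reading.kwh
        self.peak_kwh = max(self.peak_kwh, reading.kwh)

    @property
    def mean_kwh(self) -> float:
        return self.total_kwh / self.count if self.count else 0.0


def analyze_stream(readings: Iterable[MeterReading]) -> RunningInsight:
    """Consume readings as they arrive and keep the insight current."""
    insight = RunningInsight()
    for reading in readings:
        insight.update(reading)
    return insight
```

Because `analyze_stream` pulls from an iterator, each reading is processed as soon as it is produced, and the running statistics are usable at any point in the stream instead of only after a complete file has been collected.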

This pattern is covered in BDSCP Module 10: Fundamental Big Data Architecture.

For more information regarding the Big Data Science Certified Professional (BDSCP) curriculum, visit www.arcitura.com/bdscp.

The official textbook for the BDSCP curriculum is Big Data Fundamentals: Concepts, Drivers & Techniques by Paul Buhler, PhD, Thomas Erl, Wajid Khattak (ISBN: 9780134291079, Paperback, 218 pages).

Please note that this textbook covers fundamental topics only and does not cover design patterns.

For more information about this book, visit www.arcitura.com/books.