Dataset Decomposition (Buhler, Erl, Khattak)

How can a large dataset be made amenable to distributed data processing in a Big Data solution environment?

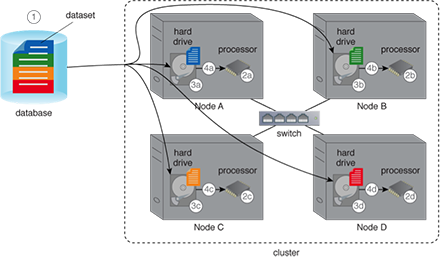

A distributed file system storage device is employed that automatically divides a large file into multiple smaller sub-files and stores them across the cluster. When a processing engine, such as MapReduce, needs to process the data, each sub-file is read independently, which enables distributed data processing. When the file needs to be read in a streaming manner or copied to a different storage technology, all sub-files are automatically stitched back together.

The large dataset is automatically split into multiple smaller sub-datasets that are stored across multiple nodes in the cluster. Each sub-dataset can then be accessed separately by the processing engine. If the file needs to be exported, all parts are automatically joined together in the correct order to reproduce the original file.
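The split-and-stitch behavior described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not how any particular distributed file system is implemented: the `Block` record, the `decompose` and `reassemble` functions, the node names, and the round-robin placement are all assumptions made for the example. Real systems such as HDFS also replicate each block on several nodes, which the sketch omits.

```python
"""Hypothetical sketch of Dataset Decomposition: split a large file into
fixed-size sub-files (blocks), spread them across cluster nodes, and
stitch them back together in the original order."""

from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Block:
    file_id: str  # name of the original large file
    index: int    # position of this block within the original file
    node: str     # hypothetical cluster node that stores the block
    data: bytes   # the block's contents


def decompose(file_id: str, content: bytes, nodes: list[str], block_size: int) -> list[Block]:
    """Divide content into block_size-byte sub-files, placed round-robin across nodes."""
    if block_size <= 0 or not nodes:
        raise ValueError("block_size must be positive and nodes must be non-empty")
    blocks = []
    for index, start in enumerate(range(0, len(content), block_size)):
        node = nodes[index % len(nodes)]  # simple round-robin placement (assumption)
        blocks.append(Block(file_id, index, node, content[start:start + block_size]))
    return blocks


def reassemble(blocks: list[Block]) -> bytes:
    """Join blocks in index order to recover the original file, e.g. for export."""
    return b"".join(b.data for b in sorted(blocks, key=lambda b: b.index))


if __name__ == "__main__":
    original = b"a large dataset saved as a single file" * 3
    blocks = decompose("sales.csv", original, ["node-1", "node-2", "node-3"], block_size=16)
    for b in blocks:
        print(b.index, b.node, len(b.data))
    # Blocks may be fetched in any order; reassembly relies only on their indexes.
    assert reassemble(list(reversed(blocks))) == original
```

Reassembly depends only on the stored block index, so sub-files can be fetched from any node in any order and still be joined back into the original file.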

- A large dataset is saved as a single file at a central location.

- The dataset needs to be processed using a processing engine deployed over a cluster.

- To enable distributed data processing, the dataset is imported into a distributed file system that automatically breaks it into smaller datasets spread across the cluster.

- The processing engine can now successfully process the dataset.
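The steps above can be illustrated with a MapReduce-style word count in which each sub-dataset is processed independently and the partial results are then merged. This is a sketch under simplifying assumptions: threads stand in for cluster nodes, and the dataset is a list of text records split at record boundaries, whereas a distributed file system typically splits at fixed byte offsets and leaves the processing engine to handle records that straddle two blocks. The function names `split_records`, `count_words`, and `process_distributed` are hypothetical.

```python
"""Hypothetical sketch: process each sub-dataset independently (map),
then merge the partial results (reduce). Threads stand in for nodes."""

from __future__ import annotations

from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_records(records: list[str], num_parts: int) -> list[list[str]]:
    """Divide records into num_parts contiguous sub-datasets of near-equal size."""
    if num_parts <= 0:
        raise ValueError("num_parts must be positive")
    size, remainder = divmod(len(records), num_parts)
    parts, start = [], 0
    for i in range(num_parts):
        # The first `remainder` parts each take one extra record.
        end = start + size + (1 if i < remainder else 0)
        parts.append(records[start:end])
        start = end
    return parts


def count_words(part: list[str]) -> Counter:
    """Map step: runs on one sub-dataset without needing any other part."""
    counts = Counter()
    for record in part:
        counts.update(record.split())
    return counts


def process_distributed(records: list[str], num_parts: int) -> Counter:
    """Decompose the dataset, process the parts concurrently, and merge the results."""
    parts = split_records(records, num_parts)
    with ThreadPoolExecutor(max_workers=num_parts) as pool:
        partial_counts = list(pool.map(count_words, parts))
    total = Counter()
    for partial in partial_counts:
        total.update(partial)  # reduce step: add up per-part counts
    return total


if __name__ == "__main__":
    data = ["big data", "data lake", "big big data", "lake"]
    print(process_distributed(data, num_parts=3))
```

Because `count_words` needs nothing beyond its own sub-dataset, the merged result matches a single-process count over the whole dataset, which is what makes the decomposed dataset amenable to distributed processing.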

This pattern is covered in BDSCP Module 10: Fundamental Big Data Architecture.

For more information regarding the Big Data Science Certified Professional (BDSCP) curriculum, visit www.arcitura.com/bdscp.

The official textbook for the BDSCP curriculum is:

Big Data Fundamentals: Concepts, Drivers & Techniques

by Paul Buhler, PhD, Thomas Erl, Wajid Khattak

(ISBN: 9780134291079, Paperback, 218 pages)

Please note that this textbook covers fundamental topics only and does not cover design patterns.

For more information about this book, visit www.arcitura.com/books.