Automated Dataset Execution (Buhler, Erl, Khattak)

How can the execution of a number of data processing activities starting from data ingress to egress be automated?

Problem

Solution

Application

Mechanisms

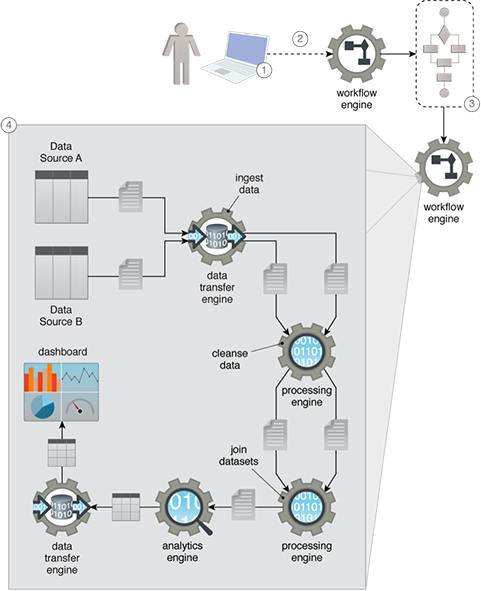

A workflow engine mechanism is used for creating and executing a workflow. Based on the interface provided by the workflow engine, either a markup language or a graphical user interface (GUI), the user specifies each operation that needs to be performed for achieving the required end result. Once the workflow is created, it is automatically executed by the workflow engine by calling the respective Big Data mechanism in turn that is responsible for executing a particular workflow step.

The productivity achieved through the application of the Automated Dataset Execution pattern depends upon how many different types of data processing operations can be automated by the workflow engine, which translates into how many different types of Big Data mechanisms can be invoked by the workflow engine. An extensible workflow engine needs to be chosen that provides extension points for future integration.

The set of operations that need to be executed are specified in the form of a flowchart. The entire flowchart is then automatically executed without requiring human intervention. This results in a configure-once, execute-often solution.
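The workflow-engine behaviour described above can be illustrated with a minimal sketch, assuming Python 3 and a workflow written in JSON as a stand-in for the markup language a workflow engine might accept. The hypothetical `WorkflowEngine` class keeps a registry of named mechanisms, which serves as its extension point, and executes each configured step in turn by calling the mechanism that step names. The mechanism names (`ingest`, `cleanse`, `egress`), the JSON step format and the in-memory records are assumptions for illustration, not the API of any real workflow engine.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical mechanism signature: receives the output of the previous
# step plus the step's configured parameters and returns new data.
Mechanism = Callable[[Any, Dict[str, Any]], Any]


class WorkflowEngine:
    """Illustrative sequential workflow engine, not a real product's API."""

    def __init__(self) -> None:
        # Extension point: mechanisms are plugged in by name.
        self._mechanisms: Dict[str, Mechanism] = {}

    def register(self, name: str, mechanism: Mechanism) -> None:
        self._mechanisms[name] = mechanism

    def run(self, workflow: List[Dict[str, Any]], data: Any = None) -> Any:
        # Call the mechanism responsible for each step, in order,
        # passing each step's output on to the next one.
        for step in workflow:
            name = step["mechanism"]
            if name not in self._mechanisms:
                raise KeyError(f"no mechanism registered for {name!r}")
            data = self._mechanisms[name](data, step.get("params", {}))
        return data


# Workflow configured once, in a markup-like JSON form (assumed format).
WORKFLOW_JSON = """
[
  {"mechanism": "ingest",  "params": {"records": [" a ", "b", "", " c"]}},
  {"mechanism": "cleanse"},
  {"mechanism": "egress",  "params": {"target": "dashboard"}}
]
"""

engine = WorkflowEngine()
# Ingress: load the configured records (stand-in for a data transfer step).
engine.register("ingest", lambda _, p: list(p["records"]))
# Cleansing: trim whitespace and drop empty records.
engine.register("cleanse", lambda d, _: [r.strip() for r in d if r.strip()])
# Egress: package the result for a downstream consumer.
engine.register("egress", lambda d, p: {"target": p["target"], "rows": d})

workflow = json.loads(WORKFLOW_JSON)
result = engine.run(workflow)
print(result)  # {'target': 'dashboard', 'rows': ['a', 'b', 'c']}
```

Because the workflow is parsed once and `engine.run` can be called as often as needed, the sketch mirrors the configure-once, execute-often idea; supporting a new kind of processing only requires another `register` call.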

- A user needs to acquire two datasets, cleanse them, join them together, apply a machine learning algorithm to the joined data and then export the results to a dashboard.

- The user creates a workflow of all required activities via the workflow engine.

- The workflow engine generates a flowchart of the activities that need to be executed.

- The entire set of activities from data ingest to egress is then scheduled and automatically executed by the workflow engine by calling the required Big Data mechanisms in turn to perform the configured activities.
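The steps above can be sketched as a small dependency graph that is executed in topological order, so that independent branches (acquiring and cleansing each dataset) need no manual sequencing. This is a minimal illustration, assuming Python 3.9+ for the standard-library `graphlib` module. The activity names, the sample records, the inner join on a hypothetical `id` field and the ordinary least-squares line fit used in place of an unspecified machine learning algorithm are all assumptions. A real workflow engine would call separate Big Data mechanisms for each activity rather than local Python functions.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Any, Callable, Dict, List, Tuple

Row = Dict[str, Any]

# Hypothetical flowchart: each activity maps to the activities it depends on.
FLOWCHART: Dict[str, Tuple[str, ...]] = {
    "acquire_a": (),
    "acquire_b": (),
    "cleanse_a": ("acquire_a",),
    "cleanse_b": ("acquire_b",),
    "join": ("cleanse_a", "cleanse_b"),
    "train": ("join",),
    "export": ("train",),
}


def cleanse(rows: List[Row]) -> List[Row]:
    # Drop records that contain missing values.
    return [r for r in rows if all(v is not None for v in r.values())]


def join(left: List[Row], right: List[Row]) -> List[Row]:
    # Inner join on the hypothetical "id" field.
    by_id = {r["id"]: r for r in right}
    return [{**row, **by_id[row["id"]]} for row in left if row["id"] in by_id]


def fit_line(rows: List[Row]) -> Dict[str, float]:
    # Ordinary least-squares fit of y = slope * x + intercept,
    # standing in for "a machine learning algorithm".
    xs = [r["x"] for r in rows]
    ys = [r["y"] for r in rows]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return {"slope": slope, "intercept": my - slope * mx}


ACTIVITIES: Dict[str, Callable[..., Any]] = {
    # Sample in-memory datasets stand in for real data sources.
    "acquire_a": lambda: [{"id": 1, "x": 1.0}, {"id": 2, "x": 2.0},
                          {"id": 3, "x": None}, {"id": 4, "x": 4.0}],
    "acquire_b": lambda: [{"id": 1, "y": 3.0}, {"id": 2, "y": 5.0},
                          {"id": 4, "y": 9.0}],
    "cleanse_a": cleanse,
    "cleanse_b": cleanse,
    "join": join,
    "train": fit_line,
    # Stand-in for pushing results to a dashboard.
    "export": lambda model: {"widget": "regression", **model},
}


def run(flowchart: Dict[str, Tuple[str, ...]],
        activities: Dict[str, Callable[..., Any]]) -> Dict[str, Any]:
    # Execute activities in dependency order, feeding each one the
    # outputs of the activities it depends on.
    results: Dict[str, Any] = {}
    for name in TopologicalSorter(flowchart).static_order():
        results[name] = activities[name](*(results[d] for d in flowchart[name]))
    return results


results = run(FLOWCHART, ACTIVITIES)
print(results["export"])
```

Scheduling the whole graph, rather than each activity individually, is what lets the pipeline run from ingress to egress without human intervention; `graphlib` also raises `CycleError` if the flowchart accidentally contains a loop.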

This pattern is covered in BDSCP Module 10: Fundamental Big Data Architecture.

For more information regarding the Big Data Science Certified Professional (BDSCP) curriculum, visit www.arcitura.com/bdscp.

The official textbook for the BDSCP curriculum is:

Big Data Fundamentals: Concepts, Drivers & Techniques

by Paul Buhler, PhD, Thomas Erl, Wajid Khattak

(ISBN: 9780134291079, Paperback, 218 pages)

Please note that this textbook covers fundamental topics only and does not cover design patterns.

For more information about this book, visit www.arcitura.com/books.