Large-Scale Batch Processing (Buhler, Erl, Khattak)

How can very large amounts of data be processed with maximum throughput?

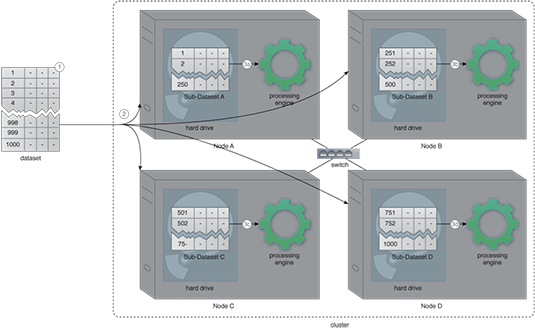

Where a large dataset is not yet available, data is first accumulated until one exists. The dataset is then saved to a disk-based storage device that automatically splits it into multiple smaller sub-datasets and stores them across multiple machines in a cluster. A batch processing engine, such as MapReduce, then processes the data in a distributed manner. Internally, the engine processes the sub-datasets individually and in parallel, such that the sub-dataset residing on a given node is generally processed by that same node. This avoids having to move the data to the computation resource. Depending on the availability of processing resources, however, a sub-dataset may sometimes need to be moved to a different machine that has spare processing capacity.
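The preference for processing a sub-dataset on the node that stores it, with a fallback to another machine when that node is busy, can be illustrated with a small scheduling sketch. This is not how MapReduce or any particular engine implements scheduling; the function `assign_tasks`, the node names, and the slot counts are all hypothetical and exist only to show the idea.

```python
def assign_tasks(block_locations, free_slots):
    """Assign each sub-dataset to a node, preferring the node that stores it.

    block_locations: dict mapping sub-dataset id -> node holding that sub-dataset
    free_slots: dict mapping node -> number of tasks the node can still accept
    Returns a dict mapping sub-dataset id -> (node, is_local).
    """
    slots = dict(free_slots)  # work on a copy so the caller's dict is untouched
    assignment = {}
    pending = []

    # Pass 1: keep computation next to the data wherever the home node has capacity.
    for block, home in block_locations.items():
        if slots.get(home, 0) > 0:
            slots[home] -= 1
            assignment[block] = (home, True)
        else:
            pending.append(block)

    # Pass 2: the remaining sub-datasets must be moved to a node with spare capacity.
    for block in pending:
        candidates = [node for node in sorted(slots) if slots[node] > 0]
        if not candidates:
            raise RuntimeError("no processing capacity left in the cluster")
        node = max(candidates, key=lambda n: slots[n])
        slots[node] -= 1
        assignment[block] = (node, False)

    return assignment


# Hypothetical cluster: node-a stores two sub-datasets but has only one free slot.
blocks = {"part-0": "node-a", "part-1": "node-a", "part-2": "node-b"}
capacity = {"node-a": 1, "node-b": 1, "node-c": 1}
plan = assign_tasks(blocks, capacity)
for block, (node, is_local) in sorted(plan.items()):
    print(block, "->", node, "(local)" if is_local else "(data moved)")
```

In this example two sub-datasets are processed where they reside, while the third is shipped to the only node with spare capacity, which mirrors the exception described above.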

The process of splitting up the large dataset into smaller datasets and distributing them across the cluster is generally accomplished by the application of the Dataset Decomposition pattern.

A contemporary data processing framework based on a distributed architecture is used to process the data in batch mode. Employing a distributed batch processing framework makes it possible to process very large amounts of data in a timely manner. Such a solution is also simple to develop and inexpensive.

- A dataset consisting of a large number of records needs to be processed.

- The dataset is saved to a distributed file system (highlighted in blue in the diagram) that automatically splits the dataset and saves sub-datasets across the cluster.

- (a,b,c,d) A batch processing engine (highlighted in green in the diagram) is used to process each sub-dataset in place, without moving it to a different location.
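The three steps above can be sketched on a single machine as a split, per-partition processing, and merge pipeline. This is a minimal illustration under stated assumptions, not a distributed implementation: worker threads stand in for cluster nodes, an in-memory list stands in for the distributed file system, and the function names (`split_dataset`, `map_partition`, `reduce_counts`, `run_batch`) and the word-count task are invented for the example.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_dataset(records, num_partitions):
    """Decompose the dataset into roughly equal sub-datasets (round-robin)."""
    return [records[i::num_partitions] for i in range(num_partitions)]


def map_partition(partition):
    """Process one sub-dataset independently: count the words in its records."""
    counts = Counter()
    for record in partition:
        counts.update(record.split())
    return counts


def reduce_counts(partials):
    """Merge the per-partition results into a single final result."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


def run_batch(records, num_partitions=4):
    partitions = split_dataset(records, num_partitions)
    # Each worker thread stands in for a cluster node working on its own partition.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = list(pool.map(map_partition, partitions))
    return reduce_counts(partials)


# Hypothetical input records.
records = [
    "error disk full",
    "info job started",
    "error network down",
    "info job finished",
]
result = run_batch(records, num_partitions=2)
print(result.most_common(3))
```

Because each partition is processed without reference to any other, the per-partition step can run in parallel, and only the small partial results need to be brought together at the end.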

This pattern is covered in BDSCP Module 10: Fundamental Big Data Architecture.

For more information regarding the Big Data Science Certified Professional (BDSCP) curriculum, visit www.arcitura.com/bdscp.

The official textbook for the BDSCP curriculum is:

Big Data Fundamentals: Concepts, Drivers & Techniques

by Paul Buhler, PhD, Thomas Erl, Wajid Khattak

(ISBN: 9780134291079, Paperback, 218 pages)

Please note that this textbook covers fundamental topics only and does not cover design patterns.

For more information about this book, visit www.arcitura.com/books.