High Volume Binary Storage (Buhler, Erl, Khattak)

How can a variety of unstructured data be stored in a scalable manner such that it can be randomly accessed based on a unique identifier?

A key-value NoSQL database is introduced within the Big Data platform. Such a database generally provides API-based access for inserting, selecting, and deleting data but does not support partial updates, because the database has no knowledge of the internal structure of the data it stores. It is well suited to storing large amounts of data in raw form, because every value is stored as a binary object. A key-value NoSQL database can also be used where the use case involves high-speed read and write operations.
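The insert, select, and delete access described above can be sketched as follows. This is a minimal illustration, not the API of any particular product: `BinaryKeyValueStore` and its `put`, `get`, and `delete` methods are hypothetical names, and an in-memory dictionary stands in for the database.

```python
from typing import Dict, Optional


class BinaryKeyValueStore:
    """Hypothetical in-memory stand-in for a key-value NoSQL database."""

    def __init__(self) -> None:
        self._data: Dict[str, bytes] = {}

    def put(self, key: str, value: bytes) -> None:
        # The whole value is written at once; the store cannot patch part of it.
        self._data[key] = bytes(value)

    def get(self, key: str) -> Optional[bytes]:
        # Returns the opaque bytes, or None if the key is unknown.
        return self._data.get(key)

    def delete(self, key: str) -> bool:
        # Returns True if a value was removed.
        return self._data.pop(key, None) is not None


store = BinaryKeyValueStore()
store.put("doc:1", b"\x00\x01raw bytes")

# Without partial updates, changing one byte means reading the value,
# modifying it on the client, and writing the whole value back.
blob = bytearray(store.get("doc:1"))
blob[0] = 0xFF
store.put("doc:1", bytes(blob))
```

Because the store treats each value as opaque bytes, the read-modify-write cycle at the end is the only way to change part of a stored object in this sketch.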

Apart from a generic disk-based key-value NoSQL database, a memory-based storage device, such as a memory grid that provides key-value storage, can also be used to gain the same functionality with the added benefit of low-latency data access.

Note that applying the High Volume Binary Storage pattern delegates responsibility for interpreting the data (serialization and deserialization) to the client. A client can therefore read the data successfully only if it knows the nature of the data being stored. Also, because access is possible only via the key, a logical key-naming convention may need to be implemented for quick retrieval of the required data units.
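As a rough sketch of what this client-side responsibility might look like, the example below serializes a record to bytes before storing it and builds keys from a simple naming scheme. The `category:entity_id:vN` key layout, the choice of JSON as the encoding, and the helper names are all assumptions made for illustration; a plain dictionary stands in for the database.

```python
import json
from typing import Dict


def make_key(category: str, entity_id: str, version: int) -> str:
    # Hypothetical naming scheme: the key encodes what the value is,
    # so a client can construct it without searching the store.
    return f"{category}:{entity_id}:v{version}"


def serialize(record: dict) -> bytes:
    # The client, not the database, decides how the bytes are encoded.
    return json.dumps(record, sort_keys=True).encode("utf-8")


def deserialize(blob: bytes) -> dict:
    # A reader must know the encoding used by the writer.
    return json.loads(blob.decode("utf-8"))


store: Dict[str, bytes] = {}  # stand-in for the key-value database

key = make_key("sensor", "a17", 3)
store[key] = serialize({"temp_c": 21.5, "ok": True})

reading = deserialize(store[key])
```

A reader that did not know the value was UTF-8 encoded JSON would see only an opaque byte string, which is why the encoding has to be agreed on outside the database.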

A contemporary database solution is implemented that supports scaling out and stores data as a binary large object (BLOB) that can be accessed based on an identifier.

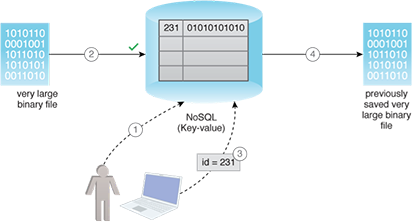

- A user tries to import a very large binary file into a key-value NoSQL database.

- The operation succeeds and the database assigns a key to the stored file.

- The user later requests the data from the database using the same key.

- The previously stored, very large binary file is returned to the user in its original format.
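The four steps above can be sketched as a round trip. This is an illustrative assumption rather than a real database client: `import_blob` and `fetch_blob` are hypothetical helpers, a dictionary stands in for the key-value database, the key is a random UUID, and 1 MiB of random bytes stands in for the very large binary file.

```python
import hashlib
import os
import uuid
from typing import Dict

database: Dict[str, bytes] = {}  # stand-in for the key-value NoSQL database


def import_blob(data: bytes) -> str:
    # Steps 1 and 2: store the file and let the store assign a key.
    key = uuid.uuid4().hex
    database[key] = data
    return key


def fetch_blob(key: str) -> bytes:
    # Steps 3 and 4: look up by key and return the bytes unchanged.
    return database[key]


original = os.urandom(1024 * 1024)  # placeholder for a large binary file
key = import_blob(original)
returned = fetch_blob(key)

# Matching digests indicate the file came back in its original form.
same = hashlib.sha256(returned).digest() == hashlib.sha256(original).digest()
```

Comparing digests rather than the raw bytes is a common way to check integrity when the object is too large to compare conveniently.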

This pattern is covered in BDSCP Module 10: Fundamental Big Data Architecture.

For more information regarding the Big Data Science Certified Professional (BDSCP) curriculum, visit www.arcitura.com/bdscp.

The official textbook for the BDSCP curriculum is:

Big Data Fundamentals: Concepts, Drivers & Techniques, by Paul Buhler, PhD, Thomas Erl, and Wajid Khattak (ISBN: 9780134291079, Paperback, 218 pages)

Please note that this textbook covers fundamental topics only and does not cover design patterns.

For more information about this book, visit www.arcitura.com/books.