Fan-in Ingress (Buhler, Erl, Khattak)

How can data arriving from multiple instances of a data source be collected in an efficient manner?

An event data transfer engine is used to collect multiple streams that originate from multiple instances of the same data source, such as temperature sensors located around the perimeter of a factory floor. The collected streams are then joined together to create a single stream. The event data transfer engine provides automatic, in-flight stream consolidation, which eliminates the need for manual consolidation. Manual consolidation requires first capturing each stream and then merging the streams together, which incurs processing latency.
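As a rough illustration of in-flight consolidation, the sketch below merges events from several source instances into one stream through a shared queue, so no stream has to be captured in full before merging. It is a minimal sketch using Python's `asyncio`, not the behavior of any particular event data transfer engine; the names `sensor`, `fan_in`, and `collect`, and the `(source, value)` event shape, are assumptions made for the example.

```python
import asyncio


async def sensor(name, readings, out_queue):
    """Hypothetical source instance: pushes (source, value) events onto the shared queue."""
    for value in readings:
        await out_queue.put((name, value))
        await asyncio.sleep(0)  # yield control so the instances interleave


async def fan_in(sources):
    """Merge events from all source instances into one list, in arrival order."""
    queue = asyncio.Queue()
    producers = [
        asyncio.create_task(sensor(name, readings, queue))
        for name, readings in sources.items()
    ]
    merged = []

    async def consume():
        # Single consumer: every event from every instance passes through here.
        while True:
            merged.append(await queue.get())
            queue.task_done()

    consumer = asyncio.create_task(consume())
    await asyncio.gather(*producers)  # all instances have finished emitting
    await queue.join()                # every queued event has been consumed
    consumer.cancel()
    return merged


def collect(sources):
    """Synchronous entry point for the sketch."""
    return asyncio.run(fan_in(sources))
```

Calling `collect({"A": [21.0, 21.5], "B": [19.8, 20.1]})` returns one list holding all four events. The order within each source is preserved, while the interleaving between sources depends on scheduling.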

This pattern is applicable in several scenarios. For example, the events emitted by multiple data source instances may not be in the order required by the data analysis algorithm, or the events may arrive out of order due to network delay. In other scenarios, due to the sheer number of streams and limited available processing capacity, events originating from different instances of the same type of data source are simply added together to create a single event. The pattern can be extended further to create a single event from multiple events of the same data source instance that fall within a particular range, such as a time interval, and then consolidate that coarse-grained event with the coarse-grained events from the rest of the data source instances. Furthermore, this pattern can be applied together with the Automated Processing Metadata Insertion pattern to add traceability back to the specific data source from which a stream originated.
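The range-based consolidation described above can be sketched in two steps: first sum each instance's events per time window, then add those coarse-grained subtotals across instances. This is only one possible reading, under assumptions: the `(source_id, timestamp, value)` event shape, the function name `consolidate_by_window`, and the `sources` field (a simple stand-in for the traceability metadata mentioned above) are all hypothetical.

```python
from collections import defaultdict


def consolidate_by_window(events, window_seconds):
    """Collapse events into one coarse-grained event per time window.

    `events` is an iterable of (source_id, timestamp, value) tuples,
    a hypothetical event shape chosen for illustration.
    """
    # Step 1: one subtotal per (window, source instance).
    per_source = defaultdict(float)
    for source_id, timestamp, value in events:
        window_start = int(timestamp // window_seconds) * window_seconds
        per_source[(window_start, source_id)] += value

    # Step 2: add the per-instance subtotals together for each window,
    # recording which instances contributed (simple traceability).
    combined = defaultdict(lambda: {"total": 0.0, "sources": []})
    for (window_start, source_id), subtotal in sorted(per_source.items()):
        combined[window_start]["total"] += subtotal
        combined[window_start]["sources"].append(source_id)

    return [
        {"window_start": start, **info}
        for start, info in sorted(combined.items())
    ]
```

With a 10-second window, events from instances A, B, and C that fall between 0 and 10 seconds collapse into a single event whose `total` is the sum of their values and whose `sources` lists the contributing instances.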

Data streams are consolidated into a single stream or a manageable number of streams by applying analysis-specific consolidation logic. Consolidation generally takes place within the component that captures the different streams of data. Applying the Fan-in Ingress pattern helps to develop a simple data processing solution and keeps resource utilization to a minimum.

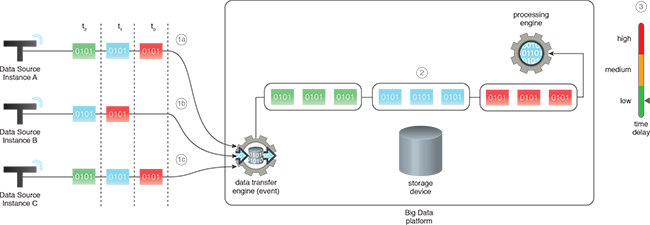

In the diagram, three different instances of a data source produce out-of-sync data streams that need to be processed in real time. The streams are collected and processed as follows:

- (a,b,c) Data from Instances A, B and C is collected by the event data transfer engine.

- The event data transfer engine orders the events as required, and the ordered events are then processed collectively by the processing engine.

- This results in low data processing latency.
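The ordering step in the list above could be approximated with a small reordering buffer. The source does not say how the engine orders events, so this is a swapped-in technique for illustration only: a fixed-size min-heap that holds back a few events and always releases the one with the earliest timestamp. The function name `reorder`, the `buffer_size` parameter, and the `(timestamp, source_id, value)` event shape are assumptions.

```python
import heapq


def reorder(events, buffer_size):
    """Yield (timestamp, source_id, value) events in timestamp order.

    The output is fully ordered only if no event arrives more than
    `buffer_size` positions later than its sorted position.
    """
    heap = []
    for event in events:
        heapq.heappush(heap, event)
        if len(heap) > buffer_size:
            # Buffer is full: release the earliest event seen so far.
            yield heapq.heappop(heap)
    # Input exhausted: drain the remaining events in order.
    while heap:
        yield heapq.heappop(heap)
```

A larger `buffer_size` tolerates later arrivals but holds events longer before passing them to the processing engine, so the value is a trade-off between ordering guarantees and latency.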

This pattern is covered in BDSCP Module 11: Advanced Big Data Architecture.
